Category: Technology

Some elements of business ride the wave of time and acquire a halo: when space, time and events coincide, the companies that wear that halo accelerate their reach and hit the bull’s-eye. The resulting amalgamation of people, processes and ideas has succeeded time and again. That much is etched in stone. What is more striking, though hardly surprising, is that the elements that create the halo are never the same and are always subject to disruption. Some innovations have elevated organizations, and some disruptions have catapulted a few others to the top. In particular, the digital forces once touted as a disruption are increasingly becoming the norm.

The Whole Picture

Digital transformation is not about islands of digitization, such as digital marketing, digital customer behavior or the move from paper to digital media within an organization. Rather, it is a demanding process that requires considerable time, holistic thought and skilled people to guide it. Ideally, the change is driven top-down, with business leaders putting together the pieces of the digital puzzle so that business processes, activities and work models can be transformed rapidly to meet customer and business needs more quickly and efficiently. Ultimately, transforming a business by leveraging technology rests on the people who can drive the change through every layer of the organization.

Common Frontiers, Different Approaches

Organizations are experimenting with digital as the way forward, to varying degrees and at varying speeds. Some are more successful than others at making the change a holistic one that touches the entire organization. Successful organizations show traits of digital maturity across different areas of the enterprise, such as Marketing, Operations, HR and Customer Service; business models that unite these areas; and processes that embed efficiency through business automation, optimization and management.

The foundation of a successful digital transformation lies in organizational change, and this change needs to address a few key areas:

- For an existing business, the first step is to take stock and understand why to transform, what to transform and how to transform. Once you have reflected on these questions and found the answers, you can begin the journey toward a digitally modified business. Going digital can improve decision-making through big data and analytics, create more effective corporate control, optimize risk by automating manual labor, sharpen targeting with customer insights, extend traditional channels into digital ones, and support anytime, anywhere service propositions. Granted, the journey will require considerable change from the status quo, and you may never be as digital as a “born digital” company, but effective action can leverage the existing business and extend its reach, helping multinational companies to operate truly globally.

- For the organization itself, the digital transformation process is multifaceted. From the outset, it is essential that the organization has leaders capable of driving this change. The company has to revisit its best practices in business architecture, BPM, continuous improvement, continuous innovation, crowd computing and so on. The latest robotics and sensor tools can automate much of the mundane work, so that the organization can focus on building lasting relationships with its customers. A mix of digital technologies, tools and best practices sets the stage for digital transformation.

- The end point of digital transformation is the customer experience. The models, processes and coherence of the activities carried out within the organization should drive the customer journey, with an emphasis on both physical and digital touch points. Digital tools make it possible to map this journey effectively by understanding how customers interact with businesses and brands, which in turn helps find the best-fit solution for them. This is made possible by a combination of software, IoT, big data, mobile, CRM, augmented reality and so on. In practice, a company may not be able to do all of this alone; it may have to seek partners that can run part of the show, so that it can focus on building its core offering.

The final touch points, such as the interface, should help the customer feel at ease and add a touch of modernity. Looks aren’t everything, but when you and your competitor offer the same product, good looks and a streamlined interface come in handy.

The digital wave doesn’t mean throwing away the way the business is currently conducted; rather, it should dovetail with and leverage current best practices. When this is executed well, businesses can serve a wider range of customers, reach break-even sooner, and improve workflows and employee efficiency within the organization. All companies, irrespective of the segments they serve, must invest proportionally so that risks are mitigated and key opportunities are addressed in the most prudent way.

In today’s hyper-connected age, with people always on the move, one would think that not developing enterprise mobility solutions is akin to a company shooting itself in the foot. Yet many companies hesitate to develop mobility solutions, wary as they are of the misconceptions associated with them.

Here are the top six myths related to enterprise mobility solutions.

Myth #1: Legacy Software is Too Entrenched to Displace

Legacy software is often the whipping boy for enterprises that refrain from developing mobility solutions. A key accusation is that legacy software drains IT budgets, making it hard to fund new software.

The reality is that developing new mobility software doesn’t require massive investment. In fact, today’s rapidly advancing mobile solution landscape makes it possible to develop mobility solutions with little or no programming skill, at a fraction of the cost, time and effort it takes to develop traditional solutions.

The problem arises when enterprises try to throw more servers at every IT problem they confront. Smart enterprises simply leverage scalable and affordable cloud-based solutions, which do not involve capital expenditure (CAPEX).

Moreover, investment in mobility solutions often pays for itself quickly, as mobility allows the company to become lean and mean and to save the costs of maintaining legacy systems.

Myth #2: Enterprise Silos Make Mobility Solutions a Non-Starter

Most organizations that have been around for a while live with data and software silos. Sales, HR, Marketing, Finance, and other teams all use different tools, and operate disparate enterprise systems.

While data silos are indeed a drag, and removing them can be a game-changer for enterprise transformation, silos needn’t stand in the way of developing mobility solutions. Installing a remote desktop server on the legacy system makes it easy to access the data on it; if the legacy system doesn’t allow remote access, it is obsolete anyway. Also, many mobility apps can actually live with silos, and when information needs to cut across silos, several cost-effective analytics solutions are now available that can access data from different silos and collate it in the cloud.
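To make “collate it in the cloud” concrete, here is a minimal sketch, assuming each silo can export its records as dictionaries that share a common key. The silo contents, the `employee_id` key and the `collate` helper are all hypothetical; real analytics products do this at far larger scale with schema mapping and deduplication.

```python
from collections import defaultdict

# Hypothetical exports from two siloed systems; field names are illustrative.
hr_records = [
    {"employee_id": "E1", "name": "Asha", "department": "Sales"},
    {"employee_id": "E2", "name": "Ben", "department": "Finance"},
]
sales_records = [
    {"employee_id": "E1", "deals_closed": 12},
    {"employee_id": "E3", "deals_closed": 4},
]


def collate(*silos, key="employee_id"):
    """Merge records from several silos into one combined view per shared key."""
    merged = defaultdict(dict)
    for silo in silos:
        for record in silo:
            # Later silos overwrite earlier values for the same field name.
            merged[record[key]].update(record)
    return dict(merged)


view = collate(hr_records, sales_records)
```

Each silo stays untouched; only the combined view crosses the boundaries, which is why the app itself can “live with silos.”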

Myth #3: Potential Disruptions Create More Havoc than the Gains Mobility Brings

Many companies take the famous adage “if it ain’t broke, don’t fix it” to heart and refuse to dabble with mobility solutions, lest the upgrade disrupt what is already working. They worry about the difficulty of migrating legacy business software and data, the downtime this causes to business-critical processes, and the possible need to overhaul data center hardware and software. While such challenges were indeed big stumbling blocks in the past, advances in software-as-a-service (SaaS) solutions resolve many of these issues. SaaS upgrades are in most cases done overnight and do not require investment in new hardware or software.

Myth #4: Password Overload and Other Ills Breed End-User Resentment

No enterprise initiative succeeds without the support and cooperation of end users. While enterprise mobility helps employees in many ways, they often raise concerns about having to remember many passwords for the various enterprise apps they need to access. They forget passwords and raise tickets, tying up IT resources in account recovery.

While the concern is genuine, easy solutions are also available, most notably unified single sign-on (SSO). With SSO, a user logs in just once to gain access to all systems. SSO can even integrate with the user’s Google Account or the credentials used to access Microsoft Office 365.
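The core idea of SSO, one login producing a credential that many apps trust, can be sketched with a signed, expiring token. This is a deliberately simplified illustration using Python’s standard `hmac` module, not an implementation of SAML or OpenID Connect, which real SSO products use. The shared `SECRET` and the `issue_token`/`verify_token` names are assumptions for the example.

```python
import base64
import hashlib
import hmac
import json
import time

# Hypothetical secret shared by the identity provider and the enterprise apps.
SECRET = b"demo-secret-do-not-use-in-production"


def issue_token(user, ttl_seconds=3600, now=None):
    """Identity provider: called once, right after the user logs in."""
    now = time.time() if now is None else now
    payload = json.dumps({"sub": user, "exp": now + ttl_seconds}).encode()
    body = base64.urlsafe_b64encode(payload).decode()
    sig = hmac.new(SECRET, body.encode(), hashlib.sha256).hexdigest()
    return f"{body}.{sig}"


def verify_token(token, now=None):
    """Any enterprise app: accepts the token instead of asking for a password."""
    now = time.time() if now is None else now
    body, _, sig = token.partition(".")
    expected = hmac.new(SECRET, body.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(sig, expected):
        return None  # tampered or forged token
    claims = json.loads(base64.urlsafe_b64decode(body))
    if claims["exp"] < now:
        return None  # expired: the user must log in again
    return claims["sub"]
```

Because every app only verifies the token, the user types a password once, and IT has a single place to revoke access.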

Myth #5: Inadequate Training and Support Doom Implementation

A common grouse against new solutions in general, and mobility solutions in particular, is that employees do not get enough training on enterprise apps to be productive.

This concern is actually misplaced; the problem has more to do with the design of the mobility solution than with a lack of training. Mobile apps are meant to be easy, explicit and self-evident. A “kitchen sink” (all-encompassing) mobile app that replicates the features and functionality of desktop applications rarely succeeds, as it combines the worst of both worlds. The ideal enterprise mobile app instead contains just a few key functions focused on a specific process, with limited navigational choices and a simple UX, primed for quick access.

The Lopez Research Enterprise Mobility Benchmark estimates that 60% of companies allow BYOD (bring your own device), meaning employees are already familiar with their devices in the first place.

Myth #6: Enterprise Mobility Solutions are a Security Nightmare

About one in every three companies has had sensitive data compromised through lost or stolen devices. In response to such damning statistics, most enterprises take their cue from the adage “if you are not there to be hit, you cannot be hit” and limit mobile access.

The security risks associated with mobility software are real, but also blown out of proportion. In the large majority of cases, either the victims were careless or the company had weak security standards in the first place. Making risk management a key focus of the overall mobility strategy, and deploying effective mobile device management (MDM) protocols in combination with advanced encryption, modern authentication mechanisms and even analytics, mitigates most risks and makes mobility solutions as safe as on-premises software.
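A mobile device management protocol ultimately boils down to policy checks like the ones in this minimal sketch. The `Device` fields, the minimum OS version and the allow/block/wipe outcomes are all assumptions chosen for illustration; they do not describe any particular MDM product.

```python
from dataclasses import dataclass


@dataclass
class Device:
    # Hypothetical attributes a device reports to an MDM server.
    device_id: str
    storage_encrypted: bool
    passcode_set: bool
    os_version: tuple
    reported_lost: bool = False


MIN_OS = (14, 0)  # assumed minimum OS version for this example policy


def compliance_issues(device):
    """List every policy the device currently violates."""
    issues = []
    if not device.storage_encrypted:
        issues.append("storage not encrypted")
    if not device.passcode_set:
        issues.append("no passcode")
    if device.os_version < MIN_OS:
        issues.append("OS below minimum version")
    return issues


def access_decision(device):
    """Lost devices are wiped; non-compliant ones are blocked from corporate data."""
    if device.reported_lost:
        return "wipe"
    return "allow" if not compliance_issues(device) else "block"
```

A lost phone that is encrypted, passcode-protected and remotely wiped is far less of a “security nightmare” than one left unmanaged.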

Enterprises are waking up to the need to embark on a sound mobility strategy and dispel the myths associated with mobility. The Lopez Research Enterprise Mobility Benchmark reveals that 68% of companies ranked mobile-enabling the business as a top concern for 2015, surpassed only by securing corporate data, and that more than half of the companies planned to build 10 or more enterprise mobile apps in 2016.

Get in touch with us to fine-tune your mobile strategy and develop state-of-the-art mobile apps. Our cutting-edge enterprise mobility solutions not only enable your workforce to access critical information anytime and anywhere, but also increase productivity and efficiency and help you streamline your processes to better serve your customers.

That the cloud is the backbone of the IoT revolution goes without saying. Reports from the January 2016 Consumer Electronics Show (CES) reveal that most manufacturers are equipping their devices, be they refrigerators, cars, dishwashers, running shoes, thermostats or any other conceivable device, with massive cloud-based back ends so that they can join the IoT bandwagon. The investment in cloud capabilities stems from a twin need: to make these devices “smart” by letting them receive and transmit data, and to give them long-term value by making them instantly upgradable. The cloud facilitates both of these IoT objectives.

For IoT to work, the connected device needs to communicate with other “things” and also with whoever holds the control strings. The “thing” connects to cloud servers to receive and transmit data and to store instructions and commands. At another level, the “small” data from each connected yet disparate “thing” accumulates into big data in the cloud. This convergence of huge quantities of critical data in a common cloud environment, accessible anytime and anywhere, enables real-time analytics.
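The step from per-device “small” data to a fleet-wide, real-time view can be sketched in a few lines. The `CloudAggregator` class below is a hypothetical, in-memory stand-in for a cloud back end, assuming each device sends one numeric reading at a time and that a short rolling window per device is enough for the analytics in question.

```python
from collections import defaultdict, deque
from statistics import mean


class CloudAggregator:
    """Toy stand-in for a cloud back end: keeps the last `window` readings per device."""

    def __init__(self, window=5):
        self.readings = defaultdict(lambda: deque(maxlen=window))

    def ingest(self, device_id, value):
        # Each device transmits small data; the back end accumulates it.
        self.readings[device_id].append(value)

    def device_average(self, device_id):
        return mean(self.readings[device_id])

    def fleet_average(self):
        # A real-time view across all devices, computed on demand.
        values = [v for window in self.readings.values() for v in window]
        return mean(values)


agg = CloudAggregator(window=3)
for v in [1, 2, 3, 4]:
    agg.ingest("sensor-a", v)  # the oldest reading (1) drops out of the window
agg.ingest("sensor-b", 10)
```

Here `agg.device_average("sensor-a")` is 3 and `agg.fleet_average()` is 4.75; no single device could compute the second figure on its own.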

The possibilities of such cloud powered real-time analytics to make life better, and for businesses to unlock new opportunities are endless. A few cases in point:

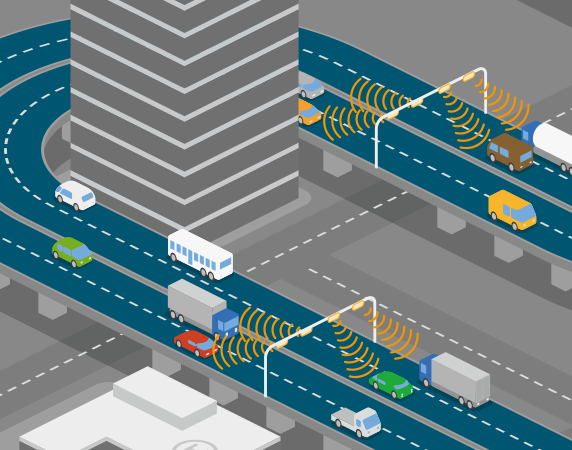

- Traffic controllers can pull in small data from hundreds of IoT-enabled traffic signals and subject it to real-time big data analytics in the cloud, synchronizing the operation of traffic lights and keeping traffic moving seamlessly.

- Smart sensors attached to a pair of running shoes may clock the runner’s pace and notify them when it is time to replace the shoes. Small data from millions of such shoes would allow shoe manufacturers to predict demand accurately, and target prospects with precision.

- IoT-powered refrigerators can let homeowners know when food products are nearing their expiration date or when stock is running out. They may also transmit data to the supermarket, which can then predict demand accurately and get its inventory right. In fact, the refrigerator may send a replenishment order to the supermarket automatically.
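The refrigerator case above reduces to two simple rules: warn when an item is close to expiry, and reorder when stock falls to a threshold. A minimal sketch, assuming the fridge knows each item’s quantity and expiry date; the `Item` fields, the reorder levels and the two-day warning window are hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class Item:
    name: str
    quantity: int
    reorder_level: int     # reorder when quantity falls to this level
    reorder_quantity: int  # how much to order from the supermarket
    expires: date


def check_fridge(items, today, warn_days=2):
    """Return expiry alerts for the homeowner and a replenishment order."""
    alerts, order = [], {}
    for item in items:
        if item.expires - today <= timedelta(days=warn_days):
            alerts.append(f"{item.name} expires on {item.expires.isoformat()}")
        if item.quantity <= item.reorder_level:
            order[item.name] = item.reorder_quantity
    return alerts, order


milk = Item("milk", 1, 1, 2, date(2016, 3, 2))
eggs = Item("eggs", 12, 4, 12, date(2016, 3, 20))
alerts, order = check_fridge([milk, eggs], today=date(2016, 3, 1))
```

The `order` dictionary is what would be sent to the supermarket; aggregated across many homes, the same data feeds its demand forecasts.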

Millions of sensors embedded in things already monitor and track data of all hues, and deeper insights from analyzing such big data facilitate better-informed decisions and responses across industries and sectors.

But is the cloud really necessary for all this? Can IoT make itself useful without the cloud?

The IoT ecosystem that makes these possibilities real could not exist without back-end cloud-based applications that churn, translate and transmit valuable intelligence.

With terabytes of data flowing in from millions of disparate connected “things,” the cloud is the only viable platform that can filter and store this data and provide access to the required information in useful ways.

The amount of incoming data from connected-device sensors often fluctuates widely. A sensor may generate 1,000 KB of data one day, 4 TB on another, and no data at all on a Sunday. The elasticity of the cloud allows resources to be scaled up or down to absorb such wide fluctuations.
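Cloud elasticity is often driven by a rule of roughly this shape: size the number of processing instances to the current ingest rate, within fixed bounds. The sketch below assumes a per-instance capacity of 50 MB/s and limits of 1 to 100 instances purely for illustration; real autoscalers add cooldowns and smoothing so they do not thrash on every spike.

```python
import math


def instances_needed(ingest_rate_mb_s, capacity_per_instance_mb_s=50.0,
                     min_instances=1, max_instances=100):
    """Scale out when data surges, scale back in when it dries up."""
    needed = math.ceil(ingest_rate_mb_s / capacity_per_instance_mb_s)
    # Clamp to the allowed range: keep one instance warm, never exceed the cap.
    return max(min_instances, min(max_instances, needed))
```

With these assumed numbers, a quiet Sunday (0 MB/s) keeps a single instance running, 120 MB/s calls for 3, and an extreme burst is capped at 100.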

Hybrid cloud systems allow IoT-enabled services to communicate with geographically distributed back-end systems. This anytime, anywhere connectivity is indispensable for real-time analytics and for enabling path-breaking business models and public services.

A cloud back end does away with the near-impossible alternative of regularly updating thousands of individual “things” one by one. Updates are part and parcel of any system’s life and are unlikely to go away anytime soon.

The built-in security of the cloud assures that data is protected and compliance standards are baked into the platform.

For all the cloud’s enabling power, security has remained a drawback. Consider IoT-enabled driverless cars that rely on cloud servers for navigation instructions: what if someone hacked into the public cloud server and sent the passengers to Kingdom Come? Newer purpose-built clouds, which device developers may share or use exclusively, offer viable solutions to such security risks, combined with newer tools that protect against information leakage and iron out the few remaining chinks in the cloud’s armor.

The relationship between the cloud and IoT cuts both ways. Even as IoT requires the cloud to work, the cloud is evolving to better serve IoT. In fact, IoT is now a chief focus of the cloud.

As the number of connected devices increases exponentially, cloud infrastructure is on the threshold of a massive scale-up to accommodate the data swell. Unique, custom-built hybrid cloud deployment models, leveraging the latest advances in software and networking and designed to meet customers’ unique workloads, enable IoT players to maximize the potential the cloud offers without being hassled by availability, performance and security issues. Side by side, specialized cloud-based service software, systems and skill development are in a boom phase, as are bandwidth improvements that facilitate data transmission between “things” and the cloud.

A case in point is Microsoft’s recently launched Azure Functions, a “serverless compute” service that allows developers to create apps that automatically respond to events. Microsoft’s Azure Service Fabric platform-as-a-service (PaaS) offering makes it possible to decompose applications into microservices for increased availability and scalability.

The IoT revolution is well underway, but as things stand, only the tip of the IoT iceberg has been touched: just 0.06% of the things that could potentially be connected to the Internet are currently connected. However, growth is exponential, with 328 million new “things” joining the IoT ecosystem every month. Cloud infrastructure is gearing up in parallel to enable a whole new world of cloud-powered IoT.

Fingent delivers technologies to enable your IoT solutions: cloud, networks and gateways, heterogeneous device support, systems capabilities and data analytics. We provide industry-specific solutions that improve productivity and operational efficiency, with exceptional reliability and security. Learn about Fingent’s IoT System

Work-related injuries cost about $250 million in the United States across several industries, and about 20% of them have been found to be lower back injuries. In fact, according to the U.S. Bureau of Labor Statistics, back injuries account for a large share of lost employee workdays.

Most organizations know the importance of occupational health and safety practices, yet many still struggle to maintain them. Even though a number of systems are available, organizations find it hard to judge the effectiveness of one over another.

This Is Where Wearable Technology Can Help

Injuries can happen by chance, and some, like an accidental slip or fall, are unavoidable. Most of the time, though, a major cause of injury is improper technique in performing a job. In loading and unloading, for instance, asymmetrical loading often leads to injuries.

Wearables can help here by tracking and recording every step taken, move made, turn or twist, and promptly reporting exactly where workers risk injury because of poor movements.

How Wearables can Help Improve Workplace Safety

Wearable technology has become so sophisticated that it can measure human movement and posture more accurately than ever before. As a result, it can provide companies with prompt, objective data that is easily interpreted and turned into effective results.

Wearable technology uses small sensors placed on workers as they go about their daily routines. These sensors provide live data on their movements, which can be combined with high-definition video to pinpoint risk areas accurately. Repeated problematic movements can be flagged as potential causes of injury. Conversely, if a worker hasn’t moved for a set amount of time, the system can automatically alert co-workers to act immediately.
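Both ideas in the paragraph above, flagging risky posture and raising an inactivity alert, can be sketched from a stream of trunk-angle readings. This is a simplified illustration, assuming time-sorted `(timestamp_s, trunk_flexion_deg)` samples with each angle held until the next sample; the 20-degree threshold borrows the back-bending measure from the VINCI results, while the 60-second idle limit and 1-degree motion tolerance are arbitrary assumptions.

```python
def analyze_trunk_angles(samples, bend_threshold_deg=20.0, idle_limit_s=60.0,
                         motion_epsilon_deg=1.0):
    """samples: non-empty list of (timestamp_s, trunk_flexion_deg), sorted by time.

    Returns (fraction of time spent bent beyond the threshold,
             timestamp of the first inactivity alert or None).
    """
    risky_time = 0.0
    total_time = 0.0
    idle_start = samples[0][0]
    alert_at = None
    for (t0, a0), (t1, a1) in zip(samples, samples[1:]):
        dt = t1 - t0
        total_time += dt
        if a0 > bend_threshold_deg:
            risky_time += dt  # angle a0 is assumed to hold until t1
        if abs(a1 - a0) > motion_epsilon_deg:
            idle_start = t1  # the worker moved; reset the inactivity clock
        elif alert_at is None and t1 - idle_start >= idle_limit_s:
            alert_at = t1  # no movement for too long: alert co-workers
    risky_fraction = risky_time / total_time if total_time else 0.0
    return risky_fraction, alert_at
```

For example, a worker who spends two of three 10-second intervals bent at 30 degrees gets a risky fraction of about 0.67, while a worker whose angle barely changes for 60 seconds triggers an alert at that point.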

For example, VINCI, a global construction company operating in the UK, implemented wearables in its workplace. It placed sensors on its bricklayers to collect real-time objective data, which resulted in a lower risk of lower back injury and even increased productivity and efficiency. Some of the detailed results were:

- Up to 85% reduction in time spent on work involving back bending of more than 20 degrees

- An 84% reduction in lower back muscle activation

- A 17% increase in productivity, in terms of bricks per minute

- A 70% reduction in repetitive, higher-risk movements

GPS in Wearables to Ensure Technician and Client Safety

Tracking and monitoring technologies integrated into wearables help keep remote technicians safe. ReachOut, for example, integrates the right mix of mobile devices and wearable technology with location-tracking capabilities. This provides comprehensive in-the-field safety for technicians and allows clients to tackle tricky “lone worker” problems, no matter how remote the technician’s location.
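A lone-worker check of the kind described above can be sketched as two rules on the latest GPS fix: escalate if the wearable has gone quiet for too long, and alert if the technician has left the expected work zone. This is not ReachOut’s API; the function names, the 1 km radius and the 30-minute check-in interval are hypothetical. Distance uses the standard haversine great-circle formula.

```python
import math

EARTH_RADIUS_KM = 6371.0


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two (lat, lon) points in kilometres."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def lone_worker_status(last_fix, site, now, radius_km=1.0, checkin_interval_s=1800):
    """last_fix: (lat, lon, timestamp_s) of the latest GPS report; site: (lat, lon)."""
    lat, lon, ts = last_fix
    if now - ts > checkin_interval_s:
        return "ESCALATE: no location update"
    if haversine_km(lat, lon, *site) > radius_km:
        return "ALERT: outside work zone"
    return "OK"
```

Checking staleness first matters: a silent device is the more dangerous case, since its last known position may no longer be accurate.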

In support of the above, the Institute of Management Studies at Goldsmiths, University of London, found that the productivity of people using wearables increased by 8.5%.

There are many more ways wearable technologies can improve workplace safety. For example, engineers in Australia have developed “patches” programmed to detect toxic gases and UV radiation, which can be of immense help to workers in the chemical manufacturing industry.

Another example is Rio Tinto, whose coal mine truck drivers use a device resembling a baseball cap that measures brainwaves in order to detect and prevent fatigue-related problems.

Thus, it is evident that wearable technologies can do more than just reduce work injuries.

On a practical note, aside from affecting productivity and efficiency, work injuries and unsafe work environments can also increase a company’s insurance costs. Moreover, hazardous environments without adequate safety precautions can seriously hurt employee morale and, in turn, reduce employee retention.

Hence, it is in every organization’s best interest to invest in improving worker safety and reducing the risk of injury. Wearables can provide this much-needed safety by carefully monitoring and interpreting human movements as well as environmental factors, helping to maintain optimum health for workers and the overall business.

The field service industry, as we all know, is at the peak of its transformation. Technological advancements have been arriving one after another for the past few years, and the pace of change has now reached an all-time high.

As new tools and technologies continue to take over the field service market, let’s take a look at some of the major trends for the year 2016 and beyond.

- Predictive Maintenance – Last year saw the expansion of the Internet of Things and more widespread use of analytics tools to handle big data. From this year on, it is time to put those tools to real use and enhance the predictive capabilities of connected devices, so that service can become predictive, or proactive, and automated. For example, sensors fixed on equipment and devices can notify their users when a repair is needed or when regular maintenance is due. The whole process then becomes automated, giving service providers enough insight to serve customers without the customer having to make a service request or call.

- The Internet of Everything – The Internet of Things isn’t exactly a new trend for 2016, but we will definitely see increased adoption by businesses in various industries, making it an established industry best practice. According to Gartner, about 26 billion devices, apart from smartphones, tablets and computers, will be connected through the Internet of Things by 2020. The range of devices through which people are connected is thus growing, which is exactly what the field service industry needs. Field service technicians need to be connected in real time with the main office, their colleagues, the equipment used in the field and their customers. Information captured in the field by such intelligent connected devices can then feed into predictive maintenance practices too.

- Better Integration – As a consequence of the “Internet of Everything” phenomenon, productivity and efficiency are set to increase exponentially over the next few years. As mobile devices, equipment, telematics and offices are connected and integrated with field service solutions, there will be more opportunities to work closely and communicate effectively. Custom field service software is the right way to go about it.

With vehicle tracking, scheduling and routing on mobile devices, field service technicians can make decisions remotely in real time. These tools also help them avoid issues related to reckless driving and control maintenance costs, all from a handheld device, besides the usual utilities such as access to customer history, billing information and colleagues’ locations.

- Mobility all the way – We will see most field service companies embracing mobility in the years to come. Field service solutions will take different mobile forms like tablets and smartphones, customized to suit the different business models of companies. Here are some of Gartner’s predictions for 2016 and beyond:

– About 40% of the field service workforce will be mobile

– More than 65% of the mobile workforce will have a smartphone

– Field service organizations will buy up to 53 million tablets in 2016

- A Single Provider – Along with modernization and further technological developments in the industry comes an increasing trend of relying on a single provider for all the functionalities required for work. Most companies entrust a single company to deliver the software and skills to manage their work, employees, and assets. This calls for comprehensive, robust as well as flexible platforms supported by strong providers.
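The predictive maintenance trend described above can be illustrated with a sketch that raises a work order before the customer calls. It combines a scheduled-service rule with a naive linear projection of recent vibration readings. The `Equipment` fields, the 500-hour service interval and the 7 mm/s vibration limit are assumptions for illustration; production systems use far richer models than a straight-line trend.

```python
from dataclasses import dataclass


@dataclass
class Equipment:
    asset_id: str
    run_hours: float
    last_service_hours: float
    vibration_mm_s: list  # recent vibration readings, oldest first


SERVICE_INTERVAL_H = 500.0  # assumed maintenance interval
VIBRATION_LIMIT_MM_S = 7.0  # assumed alarm level


def maintenance_ticket(eq, window=3, horizon=3):
    """Return a reason string if a work order should be raised, else None."""
    if eq.run_hours - eq.last_service_hours >= SERVICE_INTERVAL_H:
        return "scheduled service due"
    recent = eq.vibration_mm_s[-window:]
    if len(recent) >= 2:
        # Average change per reading, extrapolated `horizon` readings ahead.
        slope = (recent[-1] - recent[0]) / (len(recent) - 1)
        projected = recent[-1] + slope * horizon
        if projected >= VIBRATION_LIMIT_MM_S:
            return f"vibration trending to {projected:.1f} mm/s"
    return None
```

A pump whose vibration climbs from 3.0 to 5.0 mm/s over three readings is projected to reach 8.0 mm/s and gets a ticket even though no reading has yet crossed the limit; that early warning is the point of being predictive rather than reactive.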

What other trends do you think can be added to this list? Share with us in the comments below. Let’s discuss.

Field service businesses are trying to capitalize on the advantages offered by today’s technologies. Mobile technology, the most significant among them, has taken the industry by storm, offering productivity gains, streamlined work processes, better field agent communication, higher first-time fix rates, shorter billing cycles, reduced overhead from paper-based field service management and so on. The infographic puts together some recent trends, impacts and the state of mobile technology in field service businesses.

Interested in learning more about enabling your field team with mobile technologies? We’d love to hear your business requirements and help accordingly. Contact our specialist team now.

By now, we all know that we are living in the midst of billions of devices and machines that are connected to the internet and to each other. Need more evidence to believe it?

Well, Gartner predicts that the number of internet-connected devices and things will grow to almost 21 billion by 2020. IoT is, in fact, huge and growing.

We humans are literally on the verge of being outnumbered by connected devices in the coming years. Now, I bet we didn’t see this coming when we first started using smartphones!

And get this: each of these devices, whether smart, wicked smart or not so smart, is constantly collecting data through its various sensors. In fact, a lot of our personal information is being accessed by our smartphones alone, for crying out loud!

Is it almost time to start fearing the appalling prospect of the “Technological Singularity”?

Are we all going to get phased out by the intelligent machines one day?

We will have to let time answer that question, although we do retain some control over it through security measures.

Security and privacy are two of the most questionable aspects of IoT. Now that the number of devices and the amount of data are both increasing rapidly, it becomes all the more difficult to monitor how that data is used.

Connected everything – is it a boon or a bane?

Take consumer devices like smartphones, and think of the data they collect from your applications. They take note of everything we do with our phones and everywhere we go, including what we eat, how we travel, which routes we choose, whom we communicate with, what pictures we take and so on.

When it comes to fitness and health care, we have wearables and other smart devices that monitor our heart rate, our sleep time, our exercise routines and the like.

Likewise, sensors on many of the devices we use every day are sending and receiving information. When all this information is combined with analytics in the cloud, the amount and value of what can be learned about our health and lifestyle is massive. The issue is that technology has advanced so far that it outpaces the law’s ability to control and protect how this data is used. Needless to say, the sheer number of devices, the amount of data they generate and the applications that use it make matters worse. Ensuring security on such a wide scale is hard.

Wearables

Delving a little deeper into wearables, a 2015 study showed that around 41,000 patents were granted for wearable technologies between 2010 and 2015, which shows the pace at which wearables are advancing. They are seen as a means to overcome some common issues of modern society and to encourage people to move more. They help us lead healthier lifestyles by tracking sleep patterns and monitoring temperature, heart rate, glucose levels and the like.

For example, the Microsoft Band uses galvanic skin response sensors, just like the ones used in lie detectors, to track your activity levels, heart rate and more.

This year's CES (Consumer Electronics Show) saw the first-ever Bluetooth-connected pregnancy test, along with its companion app.

What we once thought of as science fiction is now a reality.

In healthcare, though, the HIPAA (Health Insurance Portability and Accountability Act of 1996) rules and regulations govern the sharing of health information, and they are quite strict. Whether devices like Fitbit violate HIPAA rules is being widely debated. So there does seem to be a certain safety net around health wearables.

Privacy?

According to a paper published by the Federal Trade Commission (FTC) on consumer data privacy and the IoT, of the many issues that could affect data privacy, four basic ones need our constant attention: security, data minimization, choice, and notice.

Data minimization is the practice of limiting the data collected from devices to only what the application needs, and deleting old information, in order to protect privacy. The paper also noted that data minimization could affect innovation: even if extra information does not seem useful today, it may enable future applications and features, and restricting its collection reduces the chances of better, improved applications for consumers.
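
To make data minimization concrete, here is a minimal Python sketch, assuming a hypothetical fitness app whose step-counting feature needs only a device ID, a timestamp and a step count. The field names, the `minimize` function and the 30-day retention window are illustrative assumptions, not anything prescribed by the FTC paper.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical allowlist: the only fields a step-counting feature needs.
REQUIRED_FIELDS = {"device_id", "timestamp", "step_count"}
# Hypothetical retention window; records older than this are deleted.
RETENTION = timedelta(days=30)


def minimize(records, now):
    """Keep only required fields, and only records inside the retention window."""
    kept = []
    for record in records:
        if now - record["timestamp"] > RETENTION:
            continue  # too old: drop it instead of storing it indefinitely
        kept.append({k: v for k, v in record.items() if k in REQUIRED_FIELDS})
    return kept


now = datetime(2024, 1, 31, tzinfo=timezone.utc)
raw = [
    {"device_id": "band-1", "timestamp": now - timedelta(days=2),
     "step_count": 8200, "gps": (41.88, -87.63), "contacts": ["alice"]},
    {"device_id": "band-1", "timestamp": now - timedelta(days=90),
     "step_count": 5100, "gps": (41.90, -87.65)},
]
print(sorted(minimize(raw, now)[0]))  # ['device_id', 'step_count', 'timestamp']
```

The location and contact fields never reach storage, and the 90-day-old record is discarded. The trade-off the paper raises is visible here too: whatever `minimize` drops is unavailable to any future feature.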

Notice and choice refer to informing consumers about the amount and kind of data they are going to share, and giving them the option to opt in or opt out.
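
Notice and choice can be modelled as a consent check that runs before any data is collected. The sketch below is only an illustration under assumptions: the `ConsentRegistry` class, the category names and the default of "not collected until the user opts in" are hypothetical design choices, not a standard API.

```python
from dataclasses import dataclass, field


@dataclass
class ConsentRegistry:
    """Hypothetical per-user record of which data categories were opted into."""
    # Notice: a plain-language explanation shown before consent is requested.
    notices: dict[str, str]
    granted: set[str] = field(default_factory=set)

    def opt_in(self, category: str) -> None:
        # Refuse consent for any category the user was never told about.
        if category not in self.notices:
            raise KeyError(f"no notice defined for {category!r}")
        self.granted.add(category)

    def opt_out(self, category: str) -> None:
        self.granted.discard(category)

    def may_collect(self, category: str) -> bool:
        # Default is "no": nothing is collected without an explicit opt-in.
        return category in self.granted


consent = ConsentRegistry(notices={
    "heart_rate": "Used to show your resting heart rate trend.",
    "location": "Used to map your running routes.",
})
consent.opt_in("heart_rate")
print(consent.may_collect("heart_rate"))  # True
print(consent.may_collect("location"))    # False
```

Making opt-in the default and tying every category to a notice are the two choices that keep "notice" and "choice" linked: a category cannot be consented to unless it has been explained first.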

How many times have you seen an application ask for access to your smartphone's camera, contacts, location and the like?

Even apps that don't seem to need your contacts, such as fitness apps, ask for them. Sometimes these permissions are buried in click-through end-user agreements that must be accepted before the app will work or a device can be activated.

Many other apps claim to protect your data with “bank-grade security” and “encryption”, yet few people know what those terms actually mean.

The bottom line, then, is that security and privacy are indeed two important aspects of IoT and data collection. The lack of standards, and of rules to ensure adherence to them, makes both an ever-growing concern in the IoT era.

What are your thoughts on security and sharing of data across devices? Let us know in the comments below.

With the rise of mobile phones and other smart devices came the need for software to be ubiquitous. The volume of big data that enterprises across industries deal with has also been rising. While we were busy wanting to access everything from everywhere, cloud computing was the technology that made it manageable for companies and available to us.

Everyone wants to access everything from everywhere.

Cloud computing emerged in the 2000s to meet these demands, giving people ubiquitous, convenient, on-demand access to the network. It relies on a shared pool of configurable computing resources, such as networks and servers, that can be rapidly provisioned and released with minimal management effort. The Internet of Things (IoT) also helped a great deal by keeping devices connected and enabling the sharing of resources.
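
The idea of a shared pool that is provisioned and released on demand can be illustrated with a toy model. This sketch assumes a single pool measured in virtual CPUs and a hypothetical `ResourcePool` class; real cloud platforms expose provisioning through their own APIs, which are not modelled here.

```python
class ResourcePool:
    """Toy shared pool: capacity is leased to tenants on demand and returned on release."""

    def __init__(self, total_vcpus: int):
        self.free = total_vcpus
        self.leases: dict[str, int] = {}  # tenant -> vCPUs currently held

    def provision(self, tenant: str, vcpus: int) -> bool:
        if vcpus > self.free:
            return False  # pool exhausted; the caller can retry later
        self.free -= vcpus
        self.leases[tenant] = self.leases.get(tenant, 0) + vcpus
        return True

    def release(self, tenant: str) -> None:
        # Return all of the tenant's capacity to the shared pool.
        self.free += self.leases.pop(tenant, 0)


pool = ResourcePool(total_vcpus=16)
print(pool.provision("app-a", 10))  # True
print(pool.provision("app-b", 8))   # False: only 6 vCPUs left
pool.release("app-a")
print(pool.provision("app-b", 8))   # True
```

The point of the model is that neither tenant owns hardware: capacity freed by one is immediately reusable by another, with no manual reconfiguration.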

Today, however, we face even larger volumes of data, along with growing demand for flexible resource provisioning and ubiquitous, on-demand network access. This explosion of data is what led to the emergence of a new computing model that can deliver fast, reliable real-time analytics to clients: fog computing.

Fog computing, also known as fogging, is a distributed computing infrastructure in which only some application services are handled by a remote data centre (such as the cloud), while the rest are handled at the network edge, on smart devices. Its main aims are to improve efficiency and to reduce the amount of data sent to the cloud for analysis, processing and storage.

While fog computing is often chosen for efficiency purposes, it can also be used for security and compliance reasons.

How it works

In a fog computing environment, most data processing happens on a smart mobile device or a data hub, or at the edge of the network on smart routers and other gateway devices.

In a conventional setup, a set of sensors generates immense volumes of data and sends it all to the cloud for processing and analysis. This is inefficient: it requires huge bandwidth, not to mention the cost. The back-and-forth communication between the sensors and the cloud also hurts performance, causing latency.

In fog computing, data analysis, decision-making and action all happen together, on or near the IoT devices, and only the relevant data is pushed to the cloud for further processing.
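
Here is a minimal sketch of that edge-side filtering, assuming a temperature sensor with a hypothetical "normal" band of 18 to 26 degrees. The `EdgeNode` class and its list-based `cloud_outbox` are stand-ins: a real deployment would hand relevant readings to a gateway's messaging stack rather than an in-memory list.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    sensor_id: str
    value: float


class EdgeNode:
    """Hypothetical fog node: decides and acts locally, forwards only relevant data."""

    def __init__(self, low: float, high: float):
        self.low, self.high = low, high        # assumed normal operating band
        self.cloud_outbox: list[Reading] = []  # stands in for an upload queue
        self.actions: list[str] = []           # local actions taken at the edge

    def process(self, reading: Reading) -> None:
        if self.low <= reading.value <= self.high:
            return  # normal reading: handled locally, nothing sent upstream
        # Abnormal reading: act immediately at the edge...
        self.actions.append(f"alert {reading.sensor_id}")
        # ...and push only this reading to the cloud for deeper analysis.
        self.cloud_outbox.append(reading)


node = EdgeNode(low=18.0, high=26.0)
for value in [21.5, 22.0, 35.2, 20.9]:
    node.process(Reading("temp-1", value))
print(len(node.cloud_outbox))  # 1
print(node.actions)            # ['alert temp-1']
```

Of four readings, only one crosses the network, and the alert is raised without waiting for a round trip to the cloud. That is the bandwidth and latency saving described above, in miniature.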

The architecture

A fog computing environment comprises three basic components:

- The Internet of Things (IoT) verticals or devices

- The Orchestration layer

- The Abstraction layer

All of these layers include physical as well as virtual systems that play an important role in the efficient and dynamic functioning of the fog computing system.

The IoT verticals consist of tenant applications, essentially products that are rented for use. They support multi-tenancy, which lets multiple clients host their applications on a single server or a single fog computing instance. This is a useful feature, since fog computing environments are all about flexibility and interoperability.

The orchestration layer handles data aggregation, decision-making, data sharing, migration and policy management for fog computing. It exposes different APIs, such as a Data API and an Orchestration Layer API. The orchestration API hosts the main analytics and intelligence services. The layer also includes a policy management module, which facilitates secure sharing and communication across a distributed infrastructure.

The abstraction layer provides a uniform, programmable interface to the client. As in the cloud computing model, this layer uses virtualization technologies and provides generic APIs that clients can use when building applications to be hosted on a fog computing platform.
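
The three layers can be illustrated with a deliberately simplified model. The sketch assumes that tenant applications are plain Python functions, that the orchestration layer's policy module is a mapping from each tenant to the data topics it may receive, and that the abstraction layer is a registry behind a single `invoke` call. `AbstractionLayer`, `OrchestrationLayer` and the topic names are hypothetical, not the APIs of any fog platform.

```python
from collections.abc import Callable

# Hypothetical tenant application (an IoT vertical): payload in, result out.
TenantApp = Callable[[dict], dict]


class AbstractionLayer:
    """Uniform interface that hides which physical or virtual node runs an app."""

    def __init__(self):
        self._apps: dict[str, TenantApp] = {}

    def deploy(self, tenant: str, app: TenantApp) -> None:
        self._apps[tenant] = app  # several tenants share one fog instance

    def invoke(self, tenant: str, payload: dict) -> dict:
        return self._apps[tenant](payload)


class OrchestrationLayer:
    """Routes incoming data to tenant apps, enforcing a simple sharing policy."""

    def __init__(self, platform: AbstractionLayer, policy: dict[str, set[str]]):
        self.platform = platform
        self.policy = policy  # tenant -> topics it is allowed to receive

    def dispatch(self, topic: str, payload: dict) -> dict[str, dict]:
        results = {}
        for tenant, topics in self.policy.items():
            if topic in topics:  # policy check before any data is shared
                results[tenant] = self.platform.invoke(tenant, payload)
        return results


platform = AbstractionLayer()
platform.deploy("traffic", lambda p: {"vehicles": p["count"]})
platform.deploy("billing", lambda p: {"charge": p["count"] * 0.5})
orch = OrchestrationLayer(platform, {"traffic": {"road"}, "billing": {"toll"}})
print(orch.dispatch("road", {"count": 42}))  # {'traffic': {'vehicles': 42}}
```

Two tenants share one platform, yet the billing app never sees road-sensor data, because the policy check sits in the orchestration layer rather than in each application.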

How does fog computing find its relevance in the real world?

Consider the example of Smart Transportation Systems (STSs). Suppose the traffic lights in a city, say, Chicago, are equipped with sensors. During a celebration, the city is likely to see a surge of traffic at specific times, depending on the occasion. As the traffic builds, data is collected from the individual traffic lights. An application developed by the city to monitor traffic and adjust light timings runs on each edge device. It automatically takes control and changes the light patterns in real time, responding to the rise and fall of traffic and obstructions detected by the sensors. Thus, traffic is managed smoothly at the edge.

On a normal day, not much traffic data needs to be sent to the cloud for analysis and processing. The relevant data here is the data that differs from the usual scenario, such as on a day of celebration or a parade, and only this data is sent to the cloud for analysis.
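
The traffic scenario can be sketched as a small controller running on each edge device. Everything here is an assumption for illustration: the per-minute vehicle counts, the 30-second base green phase, the 90-second cap and the 1.5x threshold for what counts as unusual traffic worth sending to the cloud.

```python
from statistics import mean


class TrafficLightController:
    """Hypothetical edge app for one intersection."""

    def __init__(self, baseline_per_min: float, base_green_s: int = 30, max_green_s: int = 90):
        self.baseline = baseline_per_min  # typical vehicles per minute
        self.base_green = base_green_s
        self.max_green = max_green_s
        self.uploads: list[dict] = []     # stands in for data sent to the cloud

    def update(self, vehicles_per_min: list[int]) -> int:
        """Return the green-phase length (seconds) for the next cycle."""
        observed = mean(vehicles_per_min)
        ratio = observed / self.baseline
        # Lengthen green time in proportion to demand, never below base or above cap.
        green = min(self.max_green, round(self.base_green * max(1.0, ratio)))
        if ratio > 1.5:  # unusual traffic: only this summary goes to the cloud
            self.uploads.append({"observed": observed, "baseline": self.baseline, "green_s": green})
        return green


ctrl = TrafficLightController(baseline_per_min=20)
print(ctrl.update([19, 21, 20]))  # 30: a normal day, nothing uploaded
print(ctrl.update([45, 50, 55]))  # 75: parade traffic, green extended
print(len(ctrl.uploads))          # 1
```

The decision about light timing is made locally on every cycle; the cloud receives only the summary from the unusual cycle, which is what it needs to study event-day patterns.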

Why “fog” computing

The word “fog” in fog computing conveys the idea that cloud computing and its advantages need to be brought closer to the data source, just as, in meteorology, fog is simply cloud that sits close to the ground.

This technological innovation meets a real and pressing need. For businesses that have been waiting for the right solution to their data issues, fog computing may well be the way to go.

Image credits: Quotesgram, Atelier

For a long time now, there has been a prevalent trend in the world of business: market leaders often fail to stay on top of their industries when technology or market conditions suddenly change.

For example, Xerox was once the market leader in copiers, but Canon later took over the small-copier market. Similarly, Wal-Mart overtook the once-dominant Sears.

The computer industry shows the same pattern. Apple popularized personal computing and established the standard for user-friendly computing, yet it lagged almost five years behind the market leaders in bringing portable computers to market.

As these examples show, the pattern holds across most industries. Established companies often invest heavily and aggressively in the technologies needed to retain their existing customers, but seldom foresee where their markets are heading. They fail to invest in other, emerging technologies that may matter to their future customers. So even though they succeed in retaining current customers and dominating the market, their failure to meet customers' future demands brings them down.

Why does this happen?

The real reason for losing the wave

Broadly, it may result from several issues combined: poor planning and execution, short-term investment thinking, and internal problems such as bureaucracy, inertia and inefficiency. But the main reason for this pattern lies at the heart of the paradox. Established companies fall prey to one of the most popular and seemingly undeniable principles of management: staying close to customers.

Even when managers appear to have everything under control, customers hold a great deal of power over a company's investments. Businesses make it a point to direct their activities toward what customers want. Whether it is a new product, a new technology or a new distribution channel, the first priority is to find out what customers want: Is it something our customers would want? Will it be profitable? Is the market big enough? The questions are many.

This is how most businesses fall into the cycle. It works as long as customers are satisfied. The problem arises when customers reject a new technology or product because it does not meet their needs as effectively as the company's current offering. In the end, companies find themselves burned by the very technologies or products that their customers led them away from.

Ongoing studies of technological change have consistently found that established companies stay ahead of their industries in developing new technologies that address the next-generation performance needs of their existing customers. However, industry leaders are rarely at the forefront of commercializing new technologies that do not initially meet the needs of mainstream customers and appeal only to emerging markets.

To remain on top, businesses need to identify the technologies that will serve customers' next-generation needs and pursue them, while protecting those efforts from the processes and priorities geared toward serving mainstream customers. The way to do this is to create organizations that remain independent of the mainstream business.

Striking that fine balance takes time, effort and, most importantly, many failures. We may see more businesses embracing this approach in the future.

In the age of mobility, with growing demand for high-quality web and mobile applications, DevOps is fast becoming a reliable and preferred strategy for many organizations. Its cross-functional collaboration and speed make it a go-to approach, as it enables quick delivery of software solutions, which is precisely what today's business environment needs. Moreover, as businesses around the world undergo digital transformation, an agile environment becomes a necessity, because many business aspects linked to the transformation, such as growth, customer loyalty and satisfaction, and competitive differentiation, need to be handled well. DevOps helps create such a responsive IT environment, enabling organizations to develop and deploy high-quality software rapidly.

But how successful is DevOps? How can an organization judge whether its DevOps initiative was, or will be, successful? Many organizations are adopting it, but given the transformation most of them are going through, its success can be hard to measure, because DevOps is not a formal framework and offers little prescriptive guidance. Organizations simply have to learn as they go.

Some metrics help measure the success of DevOps accurately. Many organizations make the mistake of measuring a large number of elements, many of them unnecessary, and then retreat to the ones that are easiest to collect. The problem is that some of the metrics that matter for DevOps may be unfamiliar to organizations. For example, deployment speed and rate of change are metrics closely tied to DevOps, which is itself a comparatively new concept for many organizations.

So what are the metrics that should be considered for DevOps?

The elements that matter

DevOps calls for people-centric metrics as well as process- and technology-centric ones. Of these, people-centric metrics are probably the hardest to collect but often prove the most useful, and they can be among the most powerful influences on a DevOps program. Internal metrics such as staff training and retention rates should therefore be strongly considered.

Process metrics should measure the effectiveness of the interlinked processes throughout the delivery pipeline. They show whether collaboration is working and help identify deficiencies in processes that need more work.

Technology metrics, such as uptime and the capacity to support expected web traffic, help in reviewing the technologies used in the DevOps process. They also include insights derived from failures and errors, such as failed releases and code defects.

It is also important to keep the approach to metrics comprehensive and holistic. Sticking to just one or two aspects of measurement, such as operational or development metrics, may not provide the required results, and can even hinder behavioral improvements in the organization.

To start off, here are a few dimensions which can be used to measure the effectiveness of DevOps:

- Collaboration and sharing – This forms the foundation of a DevOps program and is hence the most important measure. Metrics in this dimension help judge acceptance of, or resistance to, the program on an ongoing basis, which is a valuable indicator of DevOps effectiveness. As mentioned before, some of these metrics, such as staff retention, training and turnover rates, are easier to collect, while others, like employee morale, are harder. It is also worth examining how metrics in other dimensions affect this one: for example, how changes in MTTR (Mean Time To Repair) affect employee morale, retention, absenteeism and the like. Automated surveys and other employee feedback channels can also be used for this dimension.

- Efficiency – This dimension focuses mainly on development and operational capacities and capabilities. Moving away from traditional ratios such as servers per admin, businesses are now using customer-centric ratios such as customers per FTE (full-time equivalent employee). This ratio is expected to rise in the coming years as more enterprises move to automation and the cloud. Other metrics, such as cost per application and cost per release, are good measures for improving data center efficiency.

- Quality – This dimension focuses on service delivery. An example is the percentage of applications rolled back because of code defects. This metric may be high at first for organizations that have just begun their DevOps initiative, probably because new processes take time to become effective. Quality metrics yield more useful insights when combined with other indicators; for example, the rollback rate combined with the change volume indicator is more revealing than either alone.

These are some other metrics in this dimension:

Cycle time – time required to complete a stage or several stages within a project

MTTR – average time taken to restore a service or repair a defective part

- Business value – This dimension focuses on external factors, such as the impact of DevOps on meeting business goals. It includes elements like customer value or loyalty, time to market and the like. Lead time provides an analogous metric that shows how well DevOps is meeting the need to deliver high-quality software services fast. This is especially important because a long lead time may mean more code defects and testing issues.

The Net Promoter Score (NPS) is another important metric, offering a simple way to measure customer loyalty. Although NPS has traditionally been used for marketing, customer loyalty is also affected by the fast, timely delivery of software services through high-quality web and mobile apps.
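
NPS has a standard calculation. Respondents rate how likely they are to recommend a product on a 0 to 10 scale; those answering 9 or 10 count as promoters, those answering 0 to 6 as detractors, and the score is the percentage of promoters minus the percentage of detractors. Here is a minimal sketch in Python, where the survey responses are made-up sample data:

```python
def net_promoter_score(scores: list[int]) -> float:
    """NPS = % promoters (9-10) minus % detractors (0-6), from 0-10 survey answers."""
    if not scores:
        raise ValueError("need at least one response")
    if any(not 0 <= s <= 10 for s in scores):
        raise ValueError("scores must be between 0 and 10")
    promoters = sum(1 for s in scores if s >= 9)
    detractors = sum(1 for s in scores if s <= 6)
    # Passives (7-8) count toward the total but toward neither group.
    return 100 * (promoters - detractors) / len(scores)


responses = [10, 9, 9, 8, 7, 6, 3, 10, 9, 5]
print(net_promoter_score(responses))  # 20.0
```

The score ranges from -100 (all detractors) to +100 (all promoters). Tracking it release by release is one way to connect delivery speed and quality to customer loyalty.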

All these metrics help in analyzing the effectiveness and success of DevOps. Tracking them can help an organization decide whether to continue with the program or what it needs to do to make it more effective.
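
Several of the metrics above reduce to simple arithmetic over delivery and incident records. This sketch assumes hypothetical records: each incident is a (detected, restored) pair of timestamps, each change is a (committed, deployed) pair, and rollbacks are counted per release. Definitions vary between organizations (for instance, whether MTTR is measured from detection or from the start of the outage), so treat these as one reasonable reading rather than a standard.

```python
from datetime import datetime, timedelta
from statistics import mean


def mttr(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Mean time to repair: average of (restored - detected) over all incidents."""
    durations = [(restored - detected).total_seconds() for detected, restored in incidents]
    return timedelta(seconds=mean(durations))


def rollback_rate(releases: int, rollbacks: int) -> float:
    """Share of releases rolled back; read it alongside the release (change) volume."""
    return rollbacks / releases if releases else 0.0


def average_lead_time(changes: list[tuple[datetime, datetime]]) -> timedelta:
    """Average time from a change being committed to it running in production."""
    durations = [(deployed - committed).total_seconds() for committed, deployed in changes]
    return timedelta(seconds=mean(durations))


incidents = [
    (datetime(2024, 3, 4, 9, 0), datetime(2024, 3, 4, 9, 30)),    # 30 minutes
    (datetime(2024, 3, 6, 14, 0), datetime(2024, 3, 6, 15, 30)),  # 90 minutes
]
changes = [
    (datetime(2024, 3, 4, 10, 0), datetime(2024, 3, 4, 16, 0)),   # 6 hours
    (datetime(2024, 3, 5, 9, 0), datetime(2024, 3, 6, 9, 0)),     # 24 hours
]
print(mttr(incidents))                # 1:00:00
print(f"{rollback_rate(40, 6):.0%}")  # 15%
print(average_lead_time(changes))     # 15:00:00
```

Both `mttr` and `average_lead_time` raise `statistics.StatisticsError` on an empty list, which is deliberate: an average over no incidents is undefined rather than zero.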

Image credits: Prashant Arora’s blog
