Category: Technology

What 2020 holds in store for software testing automation

Enterprises are constantly on the hunt for trends that improve their application’s effectiveness, expedite development cycles, reduce downtimes, and improve cost savings. In such cases, they often resort to methods that can automate mundane testing procedures. Why is it the right time to leverage test automation trends? Here we discuss a few software testing automation trends expected to dominate in 2020.

The automation testing lifecycle

Automation testing provides testing teams the ability to improve the coverage of their software tests and additionally offers improved quality, cost savings, shorter testing cycles, and a multitude of other benefits. To reap benefits out of automation techniques, the key is to incorporate the right skills, planning, as well as the right testing tools.

Let us walk through the various phases involved in an automation lifecycle:

- Deciding when, what, and how to automate

- Selecting the right tool

- Test planning, designing, and strategy selection

- Establishing the testing environment

- Development and execution of the automation test script

- Analytics

- Report Generation
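
The script development, execution, and report generation phases above can be sketched with Python's built-in `unittest` module. This is only an illustrative sketch: `apply_discount` is a hypothetical function under test, and the summary dictionary is an assumed report format, not a prescribed one.

```python
import io
import unittest


def apply_discount(price, percent):
    """Hypothetical function under test: apply a percentage discount."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class DiscountTests(unittest.TestCase):
    """The automated test script: one method per scenario."""

    def test_regular_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(100.0, 120)


def run_suite():
    """Execute the tests and return a small summary report."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(DiscountTests)
    stream = io.StringIO()  # capture runner output instead of printing it
    result = unittest.TextTestRunner(stream=stream, verbosity=0).run(suite)
    return {
        "tests_run": result.testsRun,
        "failures": len(result.failures),
        "errors": len(result.errors),
        "passed": result.wasSuccessful(),
    }


if __name__ == "__main__":
    print(run_suite())
```

In a real pipeline, the summary would typically be handed to whatever analytics or reporting tool the team has selected.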

Related Reading: Understanding The Different Types Of Software Testing

Factors that drive the adoption of test automation in 2020

- Ability to strategize and plan.

- Finding resources and skills to make use of automation tools and frameworks.

- Ability to assess related risks.

- Exploration of tools.

- Providing instant feedback so that developers can gain insights into various areas that require improvement.

- Conducting multiple tests throughout various phases.

- Increasing efficiency to reduce risks.

- Reducing defect fixing time and effort.

- Investing more time for the development and enhancement of features.

- Analyzing strategies to capture data to assess various releases.

- Ensuring API testing to determine the functionality, performance, security, and reliability of the application under test.
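
As a rough illustration of the API testing point above, the sketch below checks one endpoint for functionality and response time using only the Python standard library. The `/health` endpoint, the `HealthHandler` stub server, and the one-second threshold are all assumptions made up for this example; a real suite would target the actual application and also cover security and reliability.

```python
import json
import threading
import time
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class HealthHandler(BaseHTTPRequestHandler):
    """Hypothetical stand-in for the API under test."""

    def do_GET(self):
        if self.path == "/health":
            body = json.dumps({"status": "ok"}).encode("utf-8")
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, format, *args):
        pass  # keep test output quiet


def check_health(base_url, max_seconds=1.0):
    """Call the endpoint and report functional and timing checks."""
    # Bypass any system proxy settings so the local call goes direct.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    start = time.perf_counter()
    with opener.open(base_url + "/health", timeout=5) as response:
        status = response.status
        payload = json.loads(response.read().decode("utf-8"))
    elapsed = time.perf_counter() - start
    return {
        "functional": status == 200 and payload.get("status") == "ok",
        "fast_enough": elapsed < max_seconds,
    }


def run_demo():
    """Start the stub server on a free local port and test it."""
    server = HTTPServer(("127.0.0.1", 0), HealthHandler)  # port 0 = any free port
    thread = threading.Thread(target=server.serve_forever, daemon=True)
    thread.start()
    try:
        host, port = server.server_address
        return check_health(f"http://{host}:{port}")
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print(run_demo())
```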

Automation testing trends to watch out for in 2020

1. Test automation to improve quality for Agile and DevOps

As businesses face constant pressure to meet changing customer demands and expectations, agility becomes the key to coping with the transformation. Additionally, enterprises look out for agile processes and software delivery approaches such as DevOps to ensure a faster delivery time of applications to the market. Agile-DevOps transformation has brought about higher levels of quality, flexibility, efficiency, and productivity to achieve faster release cycles and increased ROI.

Related Reading: Myths About Load Testing in Agile Environment

2. Usability testing to ensure improved user experience

Mobile and eCommerce applications are great enablers for businesses today. The user interface and the operational flows play a critical role in determining whether visitors continue to be users or not. A quick application loading time can enhance your brand loyalty and improve customer experience and retention.

3. Big Data testing to tackle huge volumes of data

Historical data helps enterprises gain critical insights on future plans and objectives and helps them to be proactive through predictive maintenance, machine learning, and AI techniques. Mining structured as well as unstructured data is hence important for effective testing.

4. IoT testing for well-connected devices

According to the latest reports published by Statista, the total installed base of Internet of Things (IoT) connected devices is forecast to reach 75.44 billion worldwide by 2025. This figure was just 6.4 billion in 2016. The increase illustrates the massive requirement for effective IoT strategies in test automation. IoT testing covers the operating systems, software, and hardware of the connected devices, the different communication protocols, and so on.
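
To put the two Statista figures above in perspective, the implied compound annual growth rate can be worked out in a few lines. The `cagr` helper is a name introduced here for illustration, and the calculation assumes smooth year-over-year growth between 2016 and 2025, which the source does not claim.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values."""
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above: 6.4 billion devices (2016), 75.44 billion (2025).
iot_growth = cagr(6.4, 75.44, 2025 - 2016)
print(f"Implied annual growth: {iot_growth:.1%}")
```

Under that assumption, the installed base would be growing by somewhere between 31 and 32 percent every year.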

5. AI and ML testing for technological innovations

The global AI software market is forecast to grow to $14.7 billion by 2025. AI and ML technologies such as gesture recognition and speech recognition are taking the world by storm. Similarly, technologies such as neural networks and predictive maintenance require high-quality testing methodologies.

6. Blockchain Testing

Blockchain testing helps ensure that smart contracts work as intended and helps prevent fraudulent transactions, especially when dealing with digital currencies such as Bitcoin. Blockchain debugging is thus crucial for enabling streamlined and smooth financial transactions.

7. Cyber Security Testing

Cyber vulnerabilities increase day by day, and tackling them requires effective testing practices. Certified Ethical Hackers (CEH) with key security tools and technologies can safeguard applications from malfunctions and cyber attacks.

8. RPA Testing

RPA (Robotic Process Automation) testing ensures enhanced output, facilitates high-end performance, and reduces the effort required for end-to-end testing. For instance, robots can enhance a workspace with their capability to perform repetitive tasks. RPA testing is thus crucial for effective applications with faster development cycles.

Related Reading: Quality Assurance in Software Testing – Past, Present & Future

Integrating automation testing into your workflow requires you to take into account how it will impact the people, process, and technology of your organization. It’s also crucial to measure if automation fits into the cycle of continuous integration and delivery and how it will merge with your software development lifecycle. Fingent helps you reap the benefits of test automation. Contact us to know more.

Stay up to date on what's new

Featured Blogs

Talk To Our Experts

Industry 5.0 Is All Set to Highlight the Significance of Humanity at Workplace

“The industrial revolution was another of those extraordinary jumps forward in the story of civilization.”

– Stephen Gardiner, English bishop, and statesman.

Standing at the threshold of the 5th industrial revolution (also known as Industry 5.0 or 5IR), we are poised for another jump forward. And yet, we have seen in the past that the march of these successive industrial revolutions has left an element of dehumanization in their wake. Will that be true of the 5th industrial revolution as well? Experts disagree, which is good news indeed.

This blog takes a look at how the 5th industrial revolution is bringing the focus back to humanity.

A Phenomenal Journey Towards the 5th Industrial Revolution

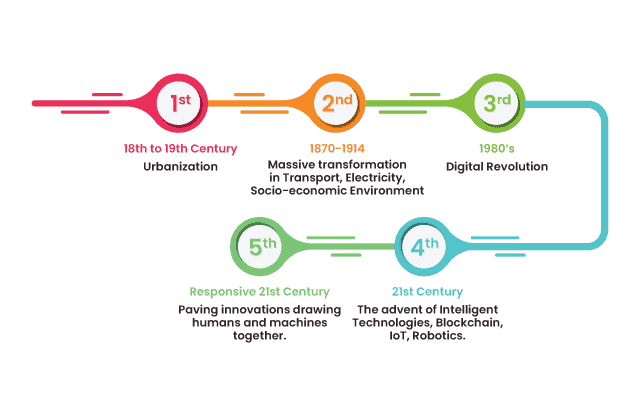

The journey started in 1760 when the first industrial revolution ushered in urbanization, providing work for people at factories.

The second industrial revolution changed the socio-economic situation of the world, improving transport and communication. Also, better automation provided better employment opportunities.

The third industrial revolution saw the invention of the computer, enabling automation in both the office and production lines.

The fourth industrial revolution brought in better communication and connectivity across the globe. It saw the advent of intelligent technologies like robotics, blockchain, IoT, and more.

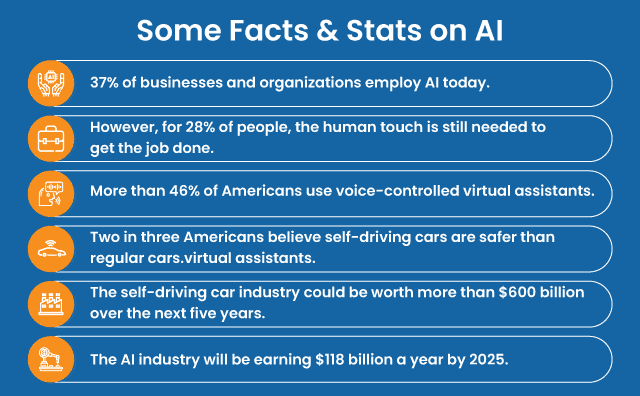

Artificial Intelligence, Robotics, Augmented Reality, and other such technologies have changed the technological landscape like never before. Let’s not forget how tremendously Artificial Intelligence has transformed customer experience in recent years.

That leads us to the question: What can we expect from the 5th industrial revolution and will it help improve humanity?

Before that, let us find out what the 5th industrial revolution is exactly.

The 5th Industrial Revolution

Though different experts have different explanations about what the 5th industrial revolution is, most of them agree that the 5th would be based on the 4th.

Why?

A look at history shows that each revolution became the foundation for the next revolution. We can thus expect the 5th revolution to be built over the 4th revolution, but it will go one step further.

An article developed in collaboration with the World Economic Forum puts it this way: “In contrast to trends in the Fourth Revolution toward dehumanization, technology and innovation best practices are being bent back toward the service of humanity by the champions of the Fifth … In the Fifth Industrial Revolution, humans and machines will dance together, metaphorically.”

(Source: DataProt)

With this in mind, we can foresee the 5th industrial revolution to be an AI (artificial intelligence) revolution with the potential of quantum computing which will draw humans and machines together at the workplace. It is about harnessing the unique attributes of AI by recruiters and employers who in effect will be more equipped to make even better and more informed decisions.

“AI is becoming more prominent with 50 percent of workers currently using some form of AI at work. However, 76 percent of workers (and 81 percent of HR leaders) find it challenging to keep up with the pace of technological changes in the workplace.”

How Industry 5.0 Brings Back the Focus to Humanity

1. Uncaging recruiters

The 5th industrial revolution (5IR or Industry 5.0) places greater importance on human intelligence than ever before. Even today in the war on talent, AI enables recruiters to capture better profile matches. 5IR brings huge benefits in providing the candidates with a more personalized experience in their job search.

“According to an article in Forbes: 34% of HR leaders are investing in workforce learning and reskilling as part of their strategy to prepare for the future of work!”

5IR will also uncage human resource teams from the major part of daily administration. This will give them time to fine-tune and meet talent requirements, allowing them to focus on the growth and productivity of their organization.

Read more: Learn why you need to develop a custom platform for remote employee hiring and onboarding.

2. Puts women at the forefront

Martha Plimpton said, “Women have always been at the forefront of progressive movements.” This is true even with regard to 5IR. It will play a critical part in shaping the role of women. As businesses hire unbiasedly, women and girls worldwide will be empowered.

3. Prevents the repetition of Engels’ pause

During the first industrial revolution, though per worker output expanded, real wages stagnated for about 50 years. This stagnation was called Engels’ pause. It is estimated that 5IR has the potential to prevent such stagnation. Though 5IR will take away mundane and repetitive tasks, it opens the way to curiosity, creativity, empathy, and judgment ensuring a balance between people and technology.

4. Changes the way we work

Most of us no longer want to work at 9-5 jobs. The way we work is dramatically changing. As our preferences in job and timings change, companies are forced to change too. Surely 5IR will change this even further.

New employees will no longer have to read a pile of documents or sit through meetings to get all the current and accurate information. This would mean that you can onboard them very easily without having to invest a great deal in training. 5IR will help companies make the most of existing resources helping management teams to focus on more strategic tasks.

The recent pandemic has also paved the way for a remote working culture, which is quickly gaining popularity. Innovative cloud platforms, such as InfinCE, are supporting this rapidly evolving work culture with streamlined collaboration and smooth communication solutions. By enabling enhanced communication channels, video conferences, and a secured environment for task sharing, monitoring, and collaboration, InfinCE and other integrated cloud platforms are revolutionizing the work culture of the future. Let’s not forget that with 5G technology, remote collaboration is expected to reach new heights with virtual meetings and augmented solutions.

Although advanced communication channels and collaboration apps, along with 5G technology, are making an impact on work culture, experts call AI and remote work a match made in the future. Why? Here are a few reasons!

- Monitoring the outputs of the remote workforce has always been a concern. But with machine learning and artificial intelligence, tracking remote tasks in real-time is not just possible but also effective and seamless.

- HR management has to comply with company policies and other legal requirements before recruiting, and these tasks prove quite tedious. Utilizing intelligent technologies to create remote positions can streamline these procedures and reduce the load on the people management department.

- Implementing survey-based tools can simplify collecting reviews and reports on employee performance which in turn can help strengthen the workforce of a company.

Intelligent technologies are sure to take a lead when it comes to human resource management, and 5IR is sure to embrace this change!

5. Paperless technology

As mentioned earlier in this blog, 5IR is all about embracing digitization and intelligent technologies to enhance human efficiency. And paperless technology is doing just that!

By simplifying strenuous paperwork across industries, paperless technology is becoming the future! From reducing excessive manual effort to reducing duplication of work and errors, paperless technology is already streamlining complex work processes in the banking, finance, and law sectors.

Another industry that has hugely benefited from this technology is the field service industry. Going paperless on the field is not just enhancing field service management efficiency but is also enabling field technicians to leverage complete digitization. Mobile field service apps like ReachOut Suite are utilizing these evolving capabilities of mobility and digital forms to empower field workers with streamlined collaboration, easy management, and instant report generation solutions to deliver enhanced customer experiences.

When talking about paperless technology and the future, let’s not forget how machine learning is impacting and shaping this new paperless era. With advanced tools to print, scan, and sign digitally, ML is taking the paperless revolution to the next level, providing a wider scope for paperless offices and digital environments. Here’s a deeper look into how Machine Learning edges us closer to paperless offices!

The 5th Industrial Revolution is Just What We Need

In 5IR, technology will bend back towards the service of humanity, marked by creativity and a common purpose. 5IR will empower us to close the historic gap and create a new socio-economic era. Isn’t that exactly what the world needs?

Fingent offers custom software solutions to address your unique business needs. If there is anything that we could help you with, please connect with us.

Knowledge Representation Models in Artificial Intelligence

Knowledge representation plays a crucial role in artificial intelligence. It has to do with the ‘thinking’ of AI systems and contributes to its intelligent behavior. Knowledge Representation is a radical and new approach in AI that is changing the world. Let’s look into what it is and its applications.

Understanding Knowledge Representation and its Use

Knowledge Representation is a field of artificial intelligence that is concerned with presenting real-world information in a form that the computer can ‘understand’ and use to ‘solve’ real-life problems or ‘handle’ real-life tasks.

Knowledge representation underpins the ability of machines to think and act like humans, for example by understanding, interpreting, and reasoning. It is related to designing agents that can think and ensuring that such thinking contributes constructively to the agent’s behavior.

In simple words, knowledge representation allows machines to behave like humans by empowering an AI machine to learn from available information, experience or experts. However, it is important to choose the right type of knowledge representation if you want to ensure business success with AI.

Four Fundamental Types of Knowledge Representation

In artificial intelligence, knowledge can be represented in various ways depending on the structure of the knowledge or the perspective of the designer or even the type of internal structure used. An effective knowledge representation should be rich enough to include the knowledge required to solve the problem. It should be natural, compact and maintainable.

Related Reading: 6 Ways Artificial Intelligence Is Driving Decision Making

Here are the four fundamental types of knowledge representation techniques:

1. Logical Representation

Knowledge and logical reasoning play a huge role in artificial intelligence. However, you often require more than just general and powerful methods to ensure intelligent behavior. Formal logic is the most helpful tool in this area. It is a language with unambiguous representation guided by certain concrete rules. Knowledge representation relies heavily not so much on what logic is used but the method of logic used to understand or decode knowledge.

It allows designers to lay down certain vital communication rules to give and acquire information from agents with minimum errors in communication. Different rules of logic allow you to represent different things resulting in an efficient inference. Hence, the knowledge acquired by logical agents will be definite which means it will either be true or false.

Although working with logical representation is challenging, it forms the basis for programming languages and enables you to construct logical reasoning.
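
A small sketch may help make this concrete. Below, knowledge is written as propositional sentences, and a query counts as definitely true only if it holds in every situation that satisfies the knowledge base. The `entails` function and the rain example are illustrative assumptions, not a reference to any particular AI library.

```python
from itertools import product


def entails(symbols, knowledge_base, query):
    """Return True if the query holds in every model that satisfies the KB."""
    for values in product([True, False], repeat=len(symbols)):
        model = dict(zip(symbols, values))
        if all(sentence(model) for sentence in knowledge_base):
            if not query(model):
                return False  # found a model of the KB where the query fails
    return True


symbols = ["raining", "wet_ground"]
knowledge_base = [
    lambda m: (not m["raining"]) or m["wet_ground"],  # raining implies wet_ground
    lambda m: m["raining"],                           # it is raining
]

ground_is_wet = entails(symbols, knowledge_base, lambda m: m["wet_ground"])
not_raining = entails(symbols, knowledge_base, lambda m: not m["raining"])
print(ground_is_wet, not_raining)  # True False
```

Enumerating every truth assignment is only practical for a handful of symbols, which hints at why working with logical representation can be challenging at scale.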

2. Semantic Network

A semantic network allows you to store knowledge in the form of a graphic network with nodes and arcs representing objects and their relationships. It could represent physical objects or concepts or even situations. A semantic network is generally used to represent data or reveal structure. It is also used to support conceptual editing and navigation.

A semantic network is simple and easy to implement and understand. It is more natural than logical representation. It allows you to categorize objects in various forms and then link those objects. It also has greater expressiveness than logic representation.
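
As an illustration, a semantic network can be sketched as a dictionary of labeled arcs between nodes. The class below, the `is_a` relation name, and the canary example are assumptions chosen for this sketch. Properties are looked up by following `is_a` arcs, so a node inherits what is known about its categories.

```python
from collections import defaultdict


class SemanticNetwork:
    """Nodes connected by labeled arcs, stored as node -> [(relation, node)]."""

    def __init__(self):
        self.arcs = defaultdict(list)

    def add(self, source, relation, target):
        self.arcs[source].append((relation, target))

    def related(self, node, relation):
        """Targets of `relation` for `node`, inherited through is_a arcs."""
        results = []
        seen = set()
        stack = [node]
        while stack:
            current = stack.pop()
            if current in seen:
                continue
            seen.add(current)
            for rel, target in self.arcs.get(current, []):
                if rel == relation:
                    results.append(target)
                elif rel == "is_a":
                    stack.append(target)  # climb to the more general concept
        return results


net = SemanticNetwork()
net.add("canary", "is_a", "bird")
net.add("canary", "color", "yellow")
net.add("bird", "is_a", "animal")
net.add("bird", "can", "fly")
net.add("animal", "has", "skin")

print(net.related("canary", "can"))    # ['fly']
print(net.related("canary", "has"))    # ['skin']
print(net.related("canary", "color"))  # ['yellow']
```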

Related Reading: Understanding The Different Types Of Artificial Intelligence

3. Frame Representation

A frame is a collection of attributes and their associated values, which describes an entity in the real world. It is a record-like structure consisting of slots and their values. Slots can be of varying sizes and types. These slots have names and values, or they can have subfields called facets. Facets allow you to put constraints on the frames.

There is no restraint or limit on the value of facets a slot could have, or the number of facets a slot could have or the number of slots a frame could have. Since a single frame is not very useful, building a frame system by collecting frames that are connected to each other will be more beneficial. It is flexible and can be used by various AI applications.
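
A minimal sketch of a frame system, assuming three facet names (`value`, `default`, and `allowed`) and a single parent link per frame, could look like this. The `Frame` class and the vehicle example are hypothetical and only meant to show slots, facets, and connected frames working together.

```python
class Frame:
    """A frame with named slots; each slot holds a dictionary of facets."""

    def __init__(self, name, parent=None):
        self.name = name
        self.parent = parent  # link to a more general frame, if any
        self.slots = {}

    def set_slot(self, slot, **facets):
        """Attach facets such as default=... or allowed=... to a slot."""
        self.slots.setdefault(slot, {}).update(facets)

    def find_facet(self, slot, facet):
        """Look up a facet on this frame, then on its ancestors."""
        frame = self
        while frame is not None:
            facets = frame.slots.get(slot, {})
            if facet in facets:
                return facets[facet]
            frame = frame.parent
        return None

    def set_value(self, slot, value):
        """Fill a slot, enforcing the 'allowed' constraint if one exists."""
        allowed = self.find_facet(slot, "allowed")
        if allowed is not None and value not in allowed:
            raise ValueError(f"{value!r} is not allowed for slot {slot!r}")
        self.slots.setdefault(slot, {})["value"] = value

    def get(self, slot):
        """Nearest explicit value or default, searching up the parent chain."""
        frame = self
        while frame is not None:
            facets = frame.slots.get(slot, {})
            if "value" in facets:
                return facets["value"]
            if "default" in facets:
                return facets["default"]
            frame = frame.parent
        return None


vehicle = Frame("vehicle")
vehicle.set_slot("wheels", default=4, allowed={2, 3, 4})

car = Frame("car", parent=vehicle)
motorbike = Frame("motorbike", parent=vehicle)
motorbike.set_value("wheels", 2)

print(car.get("wheels"))        # 4, inherited default
print(motorbike.get("wheels"))  # 2, explicit value
```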

4. Production Rules

Production rule-based representation has many properties essential for knowledge representation. It consists of production rules, a working memory, and a recognize-act cycle. Production rules are also called condition-action rules: if the condition of a rule is true according to the current database (the working memory), the action associated with the rule is performed.

Although production rules lack precise semantics and are not always efficient, they lead to a higher degree of modularity. Production rules are also regarded as the most expressive knowledge representation system.
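
The recognize-act cycle can be sketched as a short loop over a set of facts. The rule format, the order-processing facts, and the "first matching rule wins" conflict-resolution strategy below are all assumptions made for illustration; real production systems use more elaborate matching and conflict resolution.

```python
def run_production_system(rules, facts, max_cycles=100):
    """Repeatedly fire the first applicable rule until none applies."""
    memory = set(facts)  # working memory
    fired = []
    for _ in range(max_cycles):
        # Recognize: rules whose conditions hold and that would add a new fact.
        candidates = [
            rule for rule in rules
            if rule["if"] <= memory and rule["then"] not in memory
        ]
        if not candidates:
            break
        rule = candidates[0]      # conflict resolution: first match wins
        memory.add(rule["then"])  # Act: perform the rule's action
        fired.append(rule["name"])
    return memory, fired


rules = [
    {"name": "ship", "if": {"order_paid", "in_stock"}, "then": "ship_order"},
    {"name": "notify", "if": {"ship_order"}, "then": "send_tracking_email"},
    {"name": "restock", "if": {"out_of_stock"}, "then": "reorder_item"},
]

memory, fired = run_production_system(rules, {"order_paid", "in_stock"})
print(fired)  # ['ship', 'notify']
```

Each rule is independent of the others, which is the modularity mentioned above: adding a new rule to the list requires no changes to the existing ones.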

Gain the Benefits of Knowledge Representation

Used properly, knowledge representation enables artificial intelligence systems to function with near-human intelligence, even handling tasks that require a huge amount of knowledge. The increasing use of natural language also makes it human-like in its responses. Making the right choice in the type of knowledge representation you must incorporate is crucial and will ensure that you get the best out of your artificial intelligence system. If you need help with this, we’re here. Please reach out to us.

How Extended Reality Is Transforming Business Environments

Picture yourself diving into crystal-clear Grecian waters or taking a walk on the moon, all the while sitting in the comfort of your home. As fantastical as this may seem now, Extended Reality is making this possible as we speak. What is Extended reality and what are its powerful real-world applications? Let’s check.

Understanding What Extended Reality Is

Extended Reality is a blanket term that encompasses all virtual and real environments generated by computer technology. This includes components such as Virtual Reality, Augmented Reality, and Mixed Reality. Extended Reality is poised to completely revamp the way businesses interact with the media and has the potential to allow seamless interaction between the real and virtual worlds allowing its users to have a completely immersive experience.

Three Remarkable Components of Extended Reality

These components fall under the category of immersive technologies that can affect our perceptions. Here is a little bit about them:

Virtual reality: Virtual reality transposes its users to a different setting through a simulated digital experience. It makes use of a head-mounted display (HMD) to create an immersive experience by simulating as many experiences as possible. Industries such as healthcare and real estate have started using virtual reality significantly.

Augmented reality: As the word suggests, augmented reality takes the existing reality and overlays it with various types of information, enhancing the digital experience. It could be categorized as marker-based, marker-less, and location-based. It has proven useful in medical training, design, modeling and numerous other areas.

Mixed reality: Being the most recent advancement among reality technologies, mixed reality is experienced through mixed reality glasses or headsets where you can interact with physical and digital objects in real-time.

Related Reading: Augmented Reality Vs. Virtual Reality – The Future Technology

The implementation of these technologies through extended reality is enabling businesses to create innovative solutions and increase customer engagement, reduce human error and improve time efficiency.

5 Powerful Real-World Applications

“The market for Extended Reality is expected to have a compound annual growth rate of more than 65% during the forecast period of 2019-2024,” says Mordor Intelligence.

Check out these five real-world applications of extended reality:

1. Entertainment and Gaming

The entertainment and video game industries are the foremost users of Extended Reality. Camera tracking and real-time rendering are combined to create an immersive virtual environment, allowing actors to get the real feel of the scene, thereby improving their performance. Extended Reality also allows for multipurpose studio environments, thus reducing the cost of an elaborate movie set.

Video gaming is enhanced by the ability of Extended Reality to create a comprehensive participation effect. This allows users to dive into a completely different reality. Other entertainment events such as exhibitions and live music can also be enhanced by the capabilities of Extended Reality.

2. Employees and Consumers

- Training: Extended Reality allows employees to be trained and educated in low-risk, virtual environments. Medical students, surgeons, firefighters, pilots, and chemists can closely simulate risky scenarios with minimal risk and less expense. The experience they gain will prove invaluable when they handle real-life situations.

- Information: Replacing physical manuals, Extended Reality can enable technicians to focus on the task without having to flip the pages of a manual. It can even connect a remote expert to the issue in real time for advice. This would save organizations a great deal of money and, more importantly, valuable downtime, as they wouldn’t need to wait for experts to arrive.

- Improve customer perspective: Simulating virtual experience brought on by specific diseases and impairments can help doctors and caregivers receive empathy training.

Related Reading: How Top Brands Embrace Augmented Reality for Immersive Customer Experiences

3. Healthcare

Extended Reality is improving healthcare by streamlining medical procedures while enhancing patient care. Allowing surgeons to visualize the complexities of the organs in 3D, it is enabling them to plan each step of a complicated surgery well in advance. Essentially, it is ensuring that surgeons can perform surgeries in a more safe, effective and precise way.

4. Real Estate

Extended Reality makes it easier for real estate agents and managers to close a deal by enabling prospective homebuyers to get a real feel of the property. The layout scenarios that are enabled by Extended Reality enhance customer experience while providing strong business opportunities.

Related Reading: AR and VR- Game Changers of Real Estate Industry

5. Marketing

Extended Reality enables marketers to give their consumers a ‘try before you buy’ experience. It allows consumers to be transported to a place, immerses them in that world and motivates them to explore it. As an example, Cathay Pacific used a 360° video with hotspots to help potential customers experience the brand firsthand. That increased customer awareness by 29% and brand favorability by 25%.

Face the Future with Extended Reality

There are many more advancements and applications to be discovered with Extended Reality, and it is soon going to be imperative to competitive advantage. Make sure your business isn’t left behind. Talk to our expert today.

3 Reasons to Embrace Prescriptive Analytics in Healthcare

From flagging an unsafe drug interaction to activating a yearly reminder call for a mammogram, healthcare providers are leveraging patient data for a wide array of healthcare tasks. Yet, a worrying number of healthcare providers struggle to understand which one of the big data analytics methods, prescriptive or predictive, is most effective for their business.

Related Reading: 5 Ways Big Data is Changing the Healthcare Industry

Understanding the difference between prescriptive analytics and predictive analytics is the key to finding the right path to viable and productive solutions for your healthcare organization. This blog discusses why you should consider prescriptive analytics rather than predictive analytics to drive value to your business.

Predictive Analytics: The Ability to Forecast What Might Happen

Predictive analytics has been helpful to healthcare providers as they look for evidence-based methods to minimize unnecessary costs and avoid preventable adverse events. Predictive analytics aims to detect problems even before they occur using historical patterns and modeling. As the word itself suggests, it predicts. It gives you collated and analyzed data that could serve as raw material for informed decision making.

Related Reading: Data Mining and Predictive Analytics: Know The Difference

However, the healthcare industry demands a more robust infrastructure. It needs access to real-time data that allows quick decision-making both clinically and financially. It also requires medical devices that can provide information on the vitals of a patient up to the nanosecond. Based on the information available for the individual patient, clinical decision support systems should be able to provide an accurate diagnosis and the treatment options available. This must take into consideration the latest advances in medicine available as well. That is where prescriptive analytics comes into the picture.

Prescriptive Analytics: Reveals Actionable Next Steps

Prescriptive analytics takes it a step further by providing actionable next steps. If predictive analytics sheds light on the dark alley, prescriptive analytics reveals the stepping stones that would help map out the course of action to be taken. It empowers you to make more accurate predictions and gives you more options so you can make well-defined split-second decisions, which is critical for the healthcare industry.

According to Research and Markets, the global prescriptive and predictive analytics market is expected to reach $28.71 billion by 2026. The reason for such an increase is that prescriptive analytics has the capacity to analyze, sort, and learn from data and build on such data more effectively than any human mind can. Hence, the most outstanding benefit of prescriptive analytics is the outcome of the analysis.

Three Reasons to Consider Prescriptive Analysis

MarketWatch states that the healthcare prescriptive analytics market is poised to grow significantly during the forecast period of 2016-2022. Here are three reasons why.

1. Sound Clinical Decision-Making Options

Unlike predictive analytics which stops at predicting an upcoming event, prescriptive analytics empowers healthcare providers with the capability to do something about it, helping them take the best action to mitigate or avoid a negative consequence.

To illustrate, a healthcare service provider might be experiencing an inordinately increased number of hospital-acquired infections. Prescriptive analytics wouldn’t just stop at flagging the anomaly and highlighting who would be the next possible patient with vulnerable vitals. It would also point to the nurse who is responsible for spreading that particular infection to all these patients. It could also prevent similar outbreaks in the future by helping healthcare providers develop a sound antibiotic stewardship program.

2. Sound Clinical Action

Prescriptive analytics doesn’t limit itself to interpreting the evidence. It also allows health care providers to consider recommended actions for each of those predicted outcomes. It carefully links clinical priorities and measurable events such as clinical protocols or cost-effectiveness to ensure that viable solutions are recommended.

To illustrate, a healthcare provider might be able to forecast a patient’s likely return to the hospital in the very next month using predictive analysis. On the other hand, prescriptive analytics would be able to drive decisions regarding the associated cost simulation, pending medication, real-time bed counts, and so on. Or, it could help you decide if you need to adjust order sets for in-home follow-up. It empowers the hospital staff to identify the patient with a greater risk of readmission and take needed action to mitigate such risks.
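
The difference between the two approaches in this readmission scenario can be sketched in code. Here the predicted readmission risk is taken as an input (the predictive part), and the function turns it into recommended next steps (the prescriptive part). The function name, the risk thresholds, the bed-count cutoff, and the action wording are all hypothetical values invented for this example and are not clinical guidance.

```python
def recommend_actions(readmission_risk, pending_medication, free_beds):
    """Map a predicted risk plus operational context to suggested actions."""
    actions = []
    if readmission_risk >= 0.6:  # hypothetical high-risk threshold
        actions.append("schedule in-home follow-up")
        if pending_medication:
            actions.append("reconcile pending medication before discharge")
        if free_beds < 5:  # hypothetical capacity cutoff
            actions.append("flag bed capacity for possible readmission")
    elif readmission_risk >= 0.3:  # hypothetical medium-risk threshold
        actions.append("schedule follow-up call")
    return actions


high_risk_plan = recommend_actions(0.72, pending_medication=True, free_beds=3)
low_risk_plan = recommend_actions(0.10, pending_medication=False, free_beds=20)
print(high_risk_plan)
print(low_risk_plan)
```

A production system would derive such recommendations from clinical protocols, cost simulations, and live hospital data rather than fixed thresholds.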

3. Sound Financial Decisions

Prescriptive analytics has the capability to lower the cost of healthcare from patient bills to the cost of running hospital departments. In other words, it helps in making sound financial and operational decisions, providing short-term and long-term solutions to administrative and financial challenges.

Gain the Benefits of Prescriptive Analysis

Prescriptive analytics provides enormous scope and depth as developers improve technologies in the future. It is making truly meaningful advances with regard to the quality and timeliness of patient care and is reducing clinical and financial risks. Are you ready to get on board? Contact us for help.

Fingent Receives High Praise from Leading Businesses Around The World!

In today’s increasingly digital marketplace, technology is key to gaining a competitive advantage. Of course, with technology advancing at an unprecedented rate, determining how to most effectively deploy the latest technologies is easier said than done. As a top custom software development company, we build high-quality, cost-effective web and mobile solutions that deploy the newest technologies and meet all of your enterprise needs.

We’re committed to delivering the best quality and customer service — and for that reason, we’re a trusted partner to some of the world’s leading enterprises and businesses.

We know how important it is for potential buyers to peruse client testimonials and understand what it’s like to work with us before signing a contract, and that’s why we’ve partnered with the ratings and reviews platform Clutch.

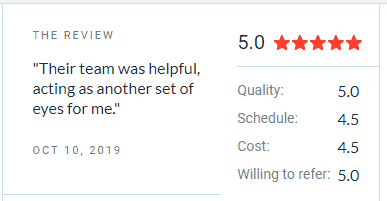

Of more than 300 firms, Clutch ranks us among the top 3 software developers in NY! Clutch determines industry leaders based on market research and client feedback, so we’re immensely grateful to the clients who have left reviews for us. One of our recent reviews was from Wage CALC, a company that provides attorneys and legal professionals with a better way to manage their wage and hour cases with wage & analysis software.

Wage CALC was in need of custom software development services. They were looking for a trustworthy, well-known developer, and they found us on Clutch! Starting from a Microsoft Excel prototype that they’d created, we built their cloud-based app from the ground up.

“I’ve had such an excellent experience with them. Fingent exceeded my expectations.” — Founder & CEO, Wage CALC

The client was impressed that we were able to break down their complex, formula-heavy prototype. They commended our professional and responsive team and were particularly appreciative of how our SVP helped to streamline communication between our team in India and theirs in California.

We received another 5-star review from a large transportation and logistics company that specializes in oil and gas equipment pickup and delivery. There’s a significant technology gap when it comes to processing orders, invoicing, and workflow management within the logistics industry, and we were prepared to change that.

We developed an end-to-end transportation management solution. The solution allows customer service representatives to assign vehicles for order pickup and delivery, while drivers can use a mobile version of the solution to send documentation and process tickets and billing. The company’s accounting team also uses the system to do invoicing, collect payments, and close orders.

“The management system is light years ahead of anything else in our industry.” — IT Director, Transportation Company

The client appreciated our consistency and availability, along with our team’s impressive knowledge and talent.

Meanwhile, The Manifest, Clutch’s partner site listing market experts, ranks us as the #1 custom software development company.

Fingent is not recognized by Clutch alone! A few other well-known rating sites, such as Software World, have also praised Fingent for its expertise in software development and technology solutions.

Learn more on why Fingent is the most trusted Tech Partner for emerging enterprises, and how our expertise can benefit your business. Contact us today — we offer free consultations!


2020 Turning The Year Of Multi-Cloud Adoption for Enterprises

There has been a lot of hype around businesses adopting multi-cloud strategies that make use of public, private, and hybrid cloud services. Businesses, especially mid-market and enterprise-level organizations, can treat a multi-cloud strategy as a smart investment by leveraging its resilient performance and virtual infrastructure.

A multi-cloud strategy is all about adopting a mixture of IaaS (Infrastructure as a Service) offerings from multiple cloud providers and sharing workloads among these services, which should be reliable, secure, flexible, and of course cost-effective.

Why Must Businesses Opt For A Multi-Cloud Strategy?

Businesses can adopt a multi-cloud strategy to acquire an optimal distribution of assets across the user’s cloud-hosting environments. With a multi-cloud strategy, businesses can have access to multiple options such as favorable Service Level Agreement terms and conditions, greater upload speed selection, customizable capacity, cost terms, and many more.

How Can Businesses Make A Multi-Cloud Adoption Decision?

Multi-cloud adoption decisions are based on 3 major considerations:

- Sourcing – Sourcing services from more than one provider improves agility and avoids or minimizes the chances of vendor lock-in. This decision can be driven by factors such as performance, data sovereignty, availability, regulatory requirements, and so on.

- Architecture – Architecture is a major decision-driver, as many modern applications are modular and can span multiple cloud providers and obtain services from any number of clouds.

- Governance – Businesses can now standardize policies, procedures, processes, and even share tools that can enable cost governance. By adopting services from multiple cloud providers, enterprises can now ensure operational control, unify administrative processes, and monitor their IT systems more effectively and efficiently.

Better disaster recovery and easier migration are the other key benefits that drive enterprises to adopt multi-cloud strategies.

Related Reading: Cloud Computing Trends To Expect In 2020

Top 7 Reasons To Adopt Multi-Cloud For Your Business

- Ability To Find The Best-In-Class Multi-Cloud Providers

Business administrators can bring in the best-in-class cloud hosting provider for each task, choosing whichever best suits their requirements. In a recent survey by Gartner, 81% of respondents said that the multi-cloud approach proved beneficial to them. Businesses are free to make their decisions based on the sourcing, architecture, and governance factors mentioned above.

- Agility

According to a recent study by RightScale, organizations leverage almost 5 different cloud platforms on average. This figure shows that enterprises are increasingly moving towards multi-cloud environments. Businesses struggling with legacy IT systems, hardware suppliers, and on-premise structures can benefit from adopting multi-cloud infrastructures to improve agility as well as workload mobility amongst heterogeneous cloud platforms.

- Flexibility And Scalability

With a competent multi-cloud adoption, enterprises can scale their storage up or down based on their requirements. A multi-cloud environment is well suited to storing data with proper automation and real-time syncing. Businesses can also assign individual data segments to different cloud vendors, depending on the requirements of each segment. For improved scalability, enterprises must focus on achieving the following 4 key factors:

- A single view of each cloud asset

- Portable application design

- The capability to automate and orchestrate across multiple clouds

- Improved workload placement

- Network Performance Improvement

With a multi-cloud interconnection, enterprises can create high-speed, low-latency infrastructures. This helps to reduce the costs associated with integrating clouds with the existing IT system. When businesses extend their networks to multiple providers in this manner, proximity is ensured and low-latency connections are established, which in turn improve the application’s response time and give the user a better experience.

- Improved Risk Management

Risk management is a major advantage of multi-cloud strategies. For instance, consider the case where a vendor suffers an infrastructure meltdown or an attack. A multi-cloud user can mitigate the risk by immediately switching to another service provider, to a backup, or to a private cloud. Adopting redundant, independent systems that provide robust authentication features, vulnerability testing, and API asset consolidation ensures proper risk management.

- Prevention Of Vendor Lock-In

With a multi-cloud strategy, enterprises can evaluate the benefits, terms, and pitfalls of multiple service providers and can choose the option to switch to another vendor after negotiation and careful validation. Analyzing terms and conditions before signing a partnership with a vendor can prevent vendor lock-in situations.

- Competitive Pricing

Enterprises can choose between the vendors and select the best-suited based on their offerings such as adjustable contracts, flexible payment schemes, the capacity to customize, and many other features.
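The risk-management scenario described above, where one vendor suffers an outage and the workload moves to another provider or a private cloud, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Provider` class, the provider names, and the `priority` field are hypothetical, and a real setup would rely on actual health checks and each vendor's own tooling.

```python
from dataclasses import dataclass


@dataclass
class Provider:
    """A hypothetical cloud provider entry; names and fields are illustrative."""
    name: str
    healthy: bool
    priority: int  # lower number = preferred provider


def pick_provider(providers):
    """Return the preferred healthy provider, or None if every provider is down."""
    healthy = [p for p in providers if p.healthy]
    if not healthy:
        return None
    return min(healthy, key=lambda p: p.priority)


providers = [
    Provider("public-cloud-a", healthy=False, priority=1),  # simulated outage
    Provider("public-cloud-b", healthy=True, priority=2),
    Provider("private-cloud", healthy=True, priority=3),
]

active = pick_provider(providers)
print(active.name if active else "no provider available")
```

Because the preferred provider is marked unhealthy in this example, the selection falls through to the next healthy option in priority order.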

To learn more about adopting an effective multi-cloud strategy and the benefits it offers, drop us a call and talk to an expert.


It’s Time to Bid Goodbye to the Legacy Technology!

The decade’s end has seen numerous inevitable changes in the technology market. It hasn’t been long since we bid adieu to Python 2, and now Microsoft Silverlight is nearing its end-of-life!

This surely brings a million questions to your curious mind!

Why did Microsoft decide to end all support for Silverlight? What are the next best alternatives available in the market? And most of all, is it okay to still keep using Silverlight?

Read on as we answer it all!

What is Microsoft Silverlight?

Silverlight, an application framework designed by Microsoft, has been driving rich media on the internet since 2007. Created as an alternative to Adobe Flash, this free, browser-focused developer tool facilitated web development by enabling computers and browsers to utilize UI elements and associated plugins for rich media streaming. With the emergence of video streaming platforms like Netflix and Amazon Prime, Silverlight turned out to be a great option to enable sophisticated effects.

So What Led To The Demise of Microsoft Silverlight?

A couple of things, but mostly Silverlight could not catch up with the rapidly evolving software market!

When Microsoft Silverlight was released in 2007, it looked like a huge success. Especially with the successful online streaming of the huge Beijing Olympics coverage in 2008, the political conventions of 2008, and the 2010 Winter Olympics, Silverlight was on a roll, later bringing major video streaming platforms like Netflix and Amazon Prime on board.

However, Silverlight could not shine for long. A few problems started to surface soon. Bugs in several applications were just one manifestation. The worst issues came about with Microsoft misjudging the real requirements of the market.

Although Silverlight reduced the user’s dependency on Flash to access rich graphics, animations, videos, and live streams online, it did so with a heavy reliance on Microsoft tools at the backend. Using the Microsoft .NET Framework and the XAML markup format, Silverlight offered support for Windows Media Audio (WMA), Windows Media Video (WMV), Advanced Audio Coding (AAC), and other formats.

Depending on a single vendor’s framework seemed difficult as well as risky for developers. Meanwhile, the constant push to upgrade Silverlight made things more complicated, leaving developers more comfortable adopting low-cost alternatives like Flash and JavaScript over Silverlight. With HTML5 and other browser standards on the rise, Silverlight became an outlier in the market.

In 2013, the Redmond giant stopped the development of Silverlight but continued to roll out bug fixes and patches regularly. In September 2015, Google Chrome ended support for Silverlight, followed by Firefox in March 2017. Microsoft Edge does not support Silverlight plug-ins at all, and with modern browsers transitioning to HTML5, Microsoft did not see any need to keep maintaining this application framework.

So, it’s official! Microsoft has announced that support for Silverlight will end on October 12, 2021.

And what is Netflix going to do? Well, Netflix currently supports Silverlight 4 and Silverlight 5. So Netflix viewers on a Windows XP or Windows 7 PC (both themselves now unsupported) can use either the Silverlight plug-in or the HTML5 player.

What Happens After October 2021?

Not to worry, there won’t be a big boom on October 12, 2021!

It is true that Silverlight will be completely unsupported after that date and will no longer receive any quality or security updates. However, Microsoft is not preventing or terminating any Silverlight applications for now.

So should you still be using Silverlight?

Well, no! Fewer and fewer users will be able to use Silverlight-driven apps. It gets worse: few developers will want to work in a dead-end development environment, which will immensely raise the cost of supporting Silverlight apps.

What Are The Next Best Options?

No doubt Microsoft Silverlight has served as a great option for developing rich apps. However, with the end of support for Silverlight, here are a couple of newer tech stacks that promise to be more reliable alternatives.

AngularJS, a popular framework maintained by Google, is a great option for developers around the world. It is an open-source framework designed to address the challenges of web development processes and offers ease in integrating with HTML code and application modules. Moreover, it automatically synchronizes with modules, which makes the development process seamless, and, following a DOM methodology, it focuses on improving performance and testability. Adding to this, AngularJS uses basic HTML, which enables building rich internet applications effectively. Also, with an MVC-based architecture and various extensions, this technology proves to be a great option for designing applications that are dynamic and responsive.

ReactJS is another option that can easily be labeled a “best seller”, based on the popularity and affection it has gained in the developer community. Launched in 2013, the ReactJS library is today well regarded and used by leading companies like Apple, PayPal, Netflix, and of course Facebook. React Native is a related framework that combines native application development with ReactJS-style JavaScript UI development to build mobile apps that are highly dynamic and user-responsive. While native modules allow implementing platform-specific features for iOS and Android, the rest of the code is written in JavaScript and shared across platforms.

Related Reading: React Native Or Flutter – The Better Choice For Mobile App Development

With technologies running in and disappearing from the market, it can be quite difficult to decide on the stack of digital tools that would best fit your business. Our business and solution experts can help ensure that you transform with the right technology to meet industry challenges and enhance your revenue opportunities. To discuss more on how we can help you identify the right technology for your company, get in touch with our experts today!


Testing Types And Strategies: Choosing A Testing Method

Understanding the basics of software testing is crucial for developers and quality assurance specialists alike. To deploy better software and to find bugs that affect application development, it is important to learn about the different types of software testing.

Types Of Software Testing

Testing is the process of executing a software program to find errors in the application being developed. Testing is critical for deploying error-free software programs. Each type of testing has its advantages and benefits. Software testing is broadly categorized into two types: Functional and Non-Functional testing.

Functional Testing Versus Non-Functional Testing

Functional Testing is used to verify the functions of a software application according to the requirements specification. Functional testing mainly involves black box testing and does not depend on the source code of the application.

Functional Testing involves checking User Interface, Database, APIs, Client/Server applications as well as security and functionality of the software under test. Functional testing can be done either manually or by making use of automation.

The various types of Functional Testing include the following:

- Unit Testing

- Integration Testing

- System Testing

- Sanity Testing

- Smoke Testing

- Interface Testing

- Regression Testing

- Beta/Acceptance Testing

Non-Functional Testing is done to check the non-functional aspects such as performance, usability, reliability, and so on of the application under test.

The various types of Non-Functional Testing include the following:

- Performance Testing

- Load Testing

- Stress Testing

- Volume Testing

- Security Testing

- Compatibility Testing

- Install Testing

- Recovery Testing

- Reliability Testing

- Usability Testing

- Compliance Testing

- Localization Testing

The 7 Most Common Types Of Software Testing

Type 1: Black-box Testing

Black-box testing is applied to verify the functionality of the software by just focusing on the various inputs and outputs of the application rather than going deep into its internal structure, design, or implementation. Black-box testing is performed from the user’s perspective.
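As a small illustration of the black-box idea, the Python sketch below checks a function purely through its inputs and outputs. The `shipping_fee` function and its "free shipping from 50.00 upward" rule are hypothetical, invented here only so there is something to test; the test cases are derived from that stated requirement rather than from the code.

```python
def shipping_fee(order_total):
    """Hypothetical function under test: free shipping from 50.00 upward."""
    if order_total < 0:
        raise ValueError("order total cannot be negative")
    return 0.0 if order_total >= 50.0 else 4.99


# Black-box cases: chosen from the stated requirement, not from the code.
cases = [
    (10.00, 4.99),   # well below the threshold
    (49.99, 4.99),   # just below the threshold
    (50.00, 0.0),    # exactly at the threshold
    (120.00, 0.0),   # well above the threshold
]

for order_total, expected in cases:
    actual = shipping_fee(order_total)
    assert actual == expected, f"{order_total}: expected {expected}, got {actual}"
```

The cases cluster around the threshold because boundary values are where requirement-based tests most often catch mistakes.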

Type 2: White-Box Testing

The White-Box software testing strategy tests an application with access to the actual source code as well as focusing on the internal structure, design, and implementation. This testing method is known by different names such as Open Box testing, Clear Box Testing, Glass Box Testing, Transparent Box Testing, Code-Based Testing, and Structural Testing. White-box testing offers the advantage of rapid problem and bug spotting.
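By contrast, a white-box test is written with the source code in view. In the hedged Python sketch below, the hypothetical `classify_password` function has three return paths, and each assertion is chosen deliberately so that every branch and both halves of the `or` condition are exercised.

```python
def classify_password(password):
    """Hypothetical function under test with three distinct paths."""
    if len(password) < 8:
        return "weak"
    if password.isalpha() or password.isdigit():
        return "medium"
    return "strong"


# One case per path through the code, picked by reading the implementation.
assert classify_password("abc") == "weak"          # first branch: too short
assert classify_password("abcdefgh") == "medium"   # second branch via isalpha()
assert classify_password("12345678") == "medium"   # second branch via isdigit()
assert classify_password("abc12345") == "strong"   # fall-through path
```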

Type 3: Acceptance Testing

Acceptance Testing is a QA (Quality Assurance) process that determines to what extent a software product attains the end user’s approval. Also known as UAT (User Acceptance Testing), it can test the usability of the system, its functionality, or both. Depending on the enterprise, acceptance testing can take the form of end-user testing, beta testing, application testing, or field testing. The advantage of acceptance testing is that usability issues can be discovered and fixed at an early stage.

Related Reading: Quality Assurance in Software Testing – Past, Present & Future

Type 4: Automated Testing

Automated testing is a method in which specialized tools are utilized to control the execution of various tests and the verification of the results is automated. This type of testing compares the actual results against the expected results. The advantage of automated testing is that it avoids the need for running through test cases manually, which is both tedious and error-prone, especially while working in an agile environment.
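Here is a minimal sketch of what this looks like in practice, using Python's built-in `unittest` module as the tool that executes the tests and compares actual results against expected ones. The `apply_discount` function is a hypothetical example, not part of any real product.

```python
import unittest


def apply_discount(price, percent):
    """Hypothetical function under test."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTests(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount(self):
        self.assertEqual(apply_discount(80.0, 0), 80.0)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(80.0, 120)


def run_suite():
    """Load and run the test case, returning the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)


result = run_suite()
```

Once the cases are written, the runner can execute them on every build without anyone stepping through them by hand.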

Type 5: Regression Testing

Regression testing is a testing practice that verifies whether the system is still working fine, even after incremental development in the application. Most automated tests performed are regression tests. It ensures that any change in the source code does not have any adverse effects on the application.
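One simple way to picture a regression check is a baseline of known inputs and outputs that is replayed after every change. The Python sketch below assumes a hypothetical `slugify` function and an illustrative baseline recorded from an earlier release; any mismatch it reports would indicate that a code change altered existing behavior.

```python
def slugify(title):
    """Hypothetical function that was recently changed."""
    words = title.lower().split()
    cleaned = ["".join(ch for ch in word if ch.isalnum()) for word in words]
    return "-".join(word for word in cleaned if word)


# Baseline recorded from the previous release (illustrative values).
BASELINE = {
    "Hello World": "hello-world",
    "  Multi   Cloud  ": "multi-cloud",
    "What's New?": "whats-new",
}


def find_regressions(func, baseline):
    """Return {input: (expected, actual)} for every case whose output changed."""
    regressions = {}
    for given, expected in baseline.items():
        actual = func(given)
        if actual != expected:
            regressions[given] = (expected, actual)
    return regressions


regressions = find_regressions(slugify, BASELINE)
print("regressions found:", len(regressions))
```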

Type 6: Functional Testing

Functional Testing tests for the actual functionality of the software. This type of testing focuses on the results of the system processing and not on how the processing takes place. During functional testing, the internal structure of the system is not known to the tester.

Type 7: Exploratory Testing

As the name indicates, Exploratory testing is all about exploring the application where the tester is constantly on the lookout for what and where to test. This approach is applied in cases where there is no or poor documentation and when there is limited time left for the testing process to be completed.

Related Reading: A Winning Mobile Testing Strategy: The Way to Go

All the methods mentioned above are only some of the most common options of software testing. The list is huge and specific methods are adopted by development vendors based on the project requirements. Sometimes, the terminologies used by each organization to define a testing method also differ from one another. However, the concept remains the same. Depending on the project requirement and scope variations, the testing type, processes, and implementation strategies keep changing.

Like to know more about Fingent’s expertise in custom software development and testing? Get in touch with our expert.


Understanding the Importance of Time Series Forecasting

To be able to see the future. Wouldn’t that be wonderful! We probably will get there someday, but time series forecasting gets you close. It gives you the ability to “see” ahead of time and succeed in your business. In this blog, we will look at what time series forecasting is, how machine learning helps in investigating time-series data, and explore a few guiding principles and see how it can benefit your business.

What Is Time Series Forecasting?

A collection of data points recorded at regular intervals is called a time series. Time series forecasting is a technique in machine learning that analyzes data and its sequence in time to predict future events. This technique provides reasonably accurate estimates of future trends based on historical time-series data.

The book Time Series Analysis: With Applications in R describes the twofold purpose of time series analysis, which is “to understand or model the stochastic mechanism that gives rise to an observed series and to predict or forecast the future values of a series based on the history of that series.”

Time series allows you to analyze major patterns such as trends, seasonality, cyclicity, and irregularity. Time series analysis is used for various applications such as stock market analysis, pattern recognition, earthquake prediction, economic forecasting, census analysis and so on.

Related Reading: Can Machine Learning Predict And Prevent Fraudsters?

Four Guiding Principles for Success in Time Series Forecasting

1. Understand the Different Time Series Patterns

Time series patterns include trends, cycles, and seasonality. Unfortunately, many confuse seasonal behavior with cyclic behavior. To avoid confusion, let’s understand what they are:

- Trend: A long-term increase or decrease in the data over a period of time is called a trend. A trend can be deterministic, meaning there is an underlying rationale for it, or stochastic, meaning it is a random feature of the time series.

- Seasonal: Oftentimes, seasonality is of a fixed and known frequency. When a time series is affected by seasonal factors like the time of the year or the day of the week, a seasonal pattern occurs.

- Cyclic: A cycle occurs when the data exhibit rises and falls that, unlike seasonal patterns, are not of a fixed frequency.
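To see how a trend and a fixed-frequency seasonal pattern can be pulled apart, here is a minimal Python sketch of a classical additive decomposition using a centered moving average. The data is synthetic and made up for illustration: a straight-line trend plus a weekly pattern with a period of 7. Real data would also contain noise, and the function names here are our own, not from any library.

```python
from statistics import mean

PERIOD = 7
seasonal_pattern = [-3, -2, -1, 0, 1, 2, 3]  # weekly effect, sums to zero

# Synthetic daily series: linear trend plus the weekly pattern (no noise).
series = [100 + 2 * t + seasonal_pattern[t % PERIOD] for t in range(28)]


def centered_moving_average(values, window):
    """Average over `window` points centered on t (window must be odd)."""
    half = window // 2
    return {
        t: mean(values[t - half:t + half + 1])
        for t in range(half, len(values) - half)
    }


# Averaging over one full period cancels the seasonal effect, leaving the trend.
trend = centered_moving_average(series, PERIOD)

# Subtract the trend, then average what is left for each day of the week.
detrended = {t: series[t] - trend[t] for t in trend}
seasonal = [
    mean(v for t, v in detrended.items() if t % PERIOD == k)
    for k in range(PERIOD)
]

print("trend at t=10:", trend[10])
print("estimated weekly pattern:", seasonal)
```

A cyclic pattern would not separate this cleanly, because without a fixed period there is no single window length that averages it away.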

2. Use Features Carefully

It is important to use features carefully, especially when their future real values are unclear. However, if the features are predictable or have patterns, you will be able to build a forecast model based on them. Using predicted values as features is risky, as it can cause substantial errors and produce a biased result. Properties of a time series, as well as time-related features that can be calculated, can be added to time series models. Mistakes in handling features can easily compound and lead to extremely skewed results, so extreme caution is in order.
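One family of features whose values are always known at forecast time is lagged observations of the series itself. The Python sketch below, with a hypothetical `make_lag_features` helper and made-up sales figures, builds each training row only from values observed before the target, so no future information leaks into the features.

```python
def make_lag_features(values, lags):
    """Build (features, target) rows using only values observed before each target."""
    max_lag = max(lags)
    rows = []
    for t in range(max_lag, len(values)):
        features = [values[t - lag] for lag in lags]
        rows.append((features, values[t]))
    return rows


sales = [12, 15, 14, 18, 21, 19, 24]  # illustrative figures
rows = make_lag_features(sales, lags=[1, 2])

for features, target in rows:
    print(features, "->", target)
```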

Related Reading: Machine Learning Vs Deep Learning: Statistical Models That Redefine Business

3. Be Prepared to Handle Smaller Time Series

Don’t be quick to dismiss smaller time series as a drawback. All time-related datasets are useful in time series forecasting. A smaller dataset wouldn’t require external memory for your computer, which makes it easier to analyze the entire dataset and make plots that could be analyzed graphically.

4. Choose The Right Resolution

Having a clear idea of the objectives of your analysis will help you choose the resolution of your data (for example hourly, daily, or monthly) and yield better results. It will reduce the risk of propagating the error to the total. The residuals of an unbiased model should average zero or close to zero, and a white noise series is expected to have all autocorrelations close to zero. In other words, choosing the right resolution will also eliminate noisy data that makes modeling difficult.
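The two residual checks mentioned above can be sketched as follows. This is a rough illustration only: the residuals are simulated random numbers standing in for a real model's errors, the `autocorrelation` helper is written by hand, and the band of about 1.96 divided by the square root of the sample size is the usual rule of thumb for white noise rather than a formal test.

```python
import random
from statistics import mean


def autocorrelation(values, lag):
    """Sample autocorrelation of `values` at the given positive lag."""
    m = mean(values)
    denominator = sum((v - m) ** 2 for v in values)
    numerator = sum(
        (values[t] - m) * (values[t - lag] - m) for t in range(lag, len(values))
    )
    return numerator / denominator


# Simulated residuals standing in for a fitted model's errors (illustrative only).
rng = random.Random(42)
residuals = [rng.gauss(0, 1) for _ in range(200)]

# Rough 95% band for white noise: about +/- 1.96 / sqrt(n).
bound = 1.96 / len(residuals) ** 0.5

print(f"mean residual: {mean(residuals):+.3f}")
for lag in range(1, 6):
    r = autocorrelation(residuals, lag)
    flag = "check" if abs(r) > bound else "ok"
    print(f"lag {lag}: {r:+.3f} ({flag})")
```

If several lags fall outside the band, the residuals still contain structure that the model, or the chosen resolution, has not captured.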

Types of Time Series Data and Forecasts

Time series analysis basically deals with three types of data: time-series data, cross-sectional data, and pooled data, which is a combination of time series data and cross-sectional data. Large amounts of data give you the opportunity for exploratory data analysis, model fidelity, and model testing and tuning. The question you could ask yourself is, how much data is available and how much data am I able to collect?

There are different types of forecasting that could be applied depending on the time horizon. They are near-future, medium-future and long-term future predictions. Think carefully about which time horizon prediction you need.

Organizations should be able to decide which forecast works best for their firm. A rolling forecast re-forecasts the next twelve months each time it is updated, whereas a traditional, or static, annual forecast creates new forecasts only towards the end of the year. Think about whether you want your forecasts updated regularly or you need a more static approach.
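The difference between the two approaches can be sketched in Python. The forecasting rule used here, repeating the average of the last three observations, is deliberately naive and chosen only to keep the example short; the function names and monthly figures are hypothetical.

```python
def forecast_next(history, horizon, window=3):
    """Naive forecast: repeat the mean of the last `window` observations."""
    level = sum(history[-window:]) / window
    return [level] * horizon


def rolling_forecasts(actuals, start, horizon, window=3):
    """Re-forecast the next `horizon` periods each time a new actual arrives."""
    return {
        t: forecast_next(actuals[:t], horizon, window)
        for t in range(start, len(actuals) + 1)
    }


monthly = [100, 110, 120, 130, 140, 150]  # illustrative monthly actuals

# Static: one twelve-month forecast made after month 3 and never revised.
static = forecast_next(monthly[:3], horizon=12)

# Rolling: a fresh twelve-month forecast after months 3, 4, 5 and 6.
rolling = rolling_forecasts(monthly, start=3, horizon=12)

print("static forecast level: ", static[0])
print("latest rolling level:  ", rolling[6][0])
```

The static forecast stays anchored to the early months, while the rolling forecast moves as each new actual comes in.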

By allowing you to harness down-sampling and up-sampling data, the concept of temporal hierarchies can mitigate modeling uncertainty. It is important to ask yourself, what temporal frequencies require forecasts?
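As a minimal illustration of moving between temporal frequencies, the Python sketch below down-samples daily figures into weekly totals and up-samples a weekly total back into equal daily shares. Both helpers are hypothetical, and the even split used for up-sampling is just one simple assumption among many possible ones.

```python
def downsample(values, factor):
    """Aggregate consecutive groups of `factor` observations by summing them."""
    usable = len(values) - len(values) % factor  # drop an incomplete final group
    return [sum(values[i:i + factor]) for i in range(0, usable, factor)]


def upsample(values, factor):
    """Spread each observation evenly over `factor` finer-grained periods."""
    return [v / factor for v in values for _ in range(factor)]


daily = list(range(1, 15))        # 14 days of illustrative data
weekly = downsample(daily, 7)     # two weekly totals
daily_again = upsample(weekly, 7)  # 14 values, each week split evenly

print("weekly totals:", weekly)
print("first day after up-sampling:", daily_again[0])
```

Forecasting at the weekly level and at the daily level, then comparing or reconciling the two, is the kind of cross-check that temporal hierarchies make possible.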

Keep Up With Time

As businesses grow more dynamic, forecasting will get increasingly harder because of the increasing amount of data needed to build the Time Series Forecasting model. Still, implementing the principles outlined in this blog will help your organization be better equipped for success. If you have any questions on how to do this, just drop us a message.
