3 Reasons to Embrace Prescriptive Analytics in Healthcare

From flagging an unsafe drug interaction to activating a yearly reminder call for a mammogram, healthcare providers are leveraging patient data for a wide array of healthcare tasks. Yet, a worrying number of healthcare providers struggle to understand which one of the big data analytics methods, prescriptive or predictive, is most effective for their business.

Related Reading: 5 Ways Big Data is Changing the Healthcare Industry

Understanding the difference between prescriptive analytics and predictive analytics is the key to finding viable and productive solutions for your healthcare organization. This blog discusses why you should consider prescriptive analytics rather than predictive analytics to drive value for your business.

Predictive Analytics: The Ability to Forecast What Might Happen

Predictive analytics has been helpful to healthcare providers as they look for evidence-based methods to minimize unnecessary costs and avoid preventable adverse events. Using historical patterns and modeling, predictive analytics aims to detect problems before they occur. As the word itself suggests, it predicts. It gives you collated and analyzed data that can serve as raw material for informed decision-making.

Related Reading: Data Mining and Predictive Analytics: Know The Difference

However, the healthcare industry demands a more robust infrastructure. It needs access to real-time data that allows quick decision-making both clinically and financially. It also requires medical devices that can provide information on the vitals of a patient up to the nanosecond. Based on the information available for the individual patient, clinical decision support systems should be able to provide an accurate diagnosis and the treatment options available. This must take into consideration the latest advances in medicine available as well. That is where prescriptive analytics comes into the picture.

Prescriptive Analytics: Reveals Actionable Next Steps

Prescriptive analytics takes it a step further by providing actionable next steps. If predictive analytics sheds light on the dark alley, prescriptive analytics reveals the stepping stones that would help map out the course of action to be taken. It empowers you to make more accurate predictions and gives you more options so you can make well-defined split-second decisions, which is critical for the healthcare industry.

According to Research and Markets, the global prescriptive and predictive analytics market is expected to reach $28.71 billion by 2026. The reason for such growth is that prescriptive analytics can analyze, sort, and learn from data, and build on that data more effectively than any human mind can. Hence, the most outstanding benefit of prescriptive analytics is the outcome of the analysis.

Three Reasons to Consider Prescriptive Analytics

MarketWatch states that the healthcare prescriptive analytics market is poised to grow significantly during the forecast period of 2016-2022. Here are three reasons why.

1. Sound Clinical Decision-Making Options

Unlike predictive analytics, which stops at predicting an upcoming event, prescriptive analytics empowers healthcare providers to do something about it, helping them take the best action to mitigate or avoid a negative consequence.

To illustrate, a healthcare service provider might be experiencing an unusually high number of hospital-acquired infections. Prescriptive analytics wouldn’t just stop at flagging the anomaly and highlighting which patient with vulnerable vitals is likely to be next. It could also help trace the likely source, such as a nurse who has been in contact with all of these patients. It could also prevent similar outbreaks in the future by helping healthcare providers develop a sound antibiotic stewardship program.

2. Sound Clinical Action

Prescriptive analytics doesn’t limit itself to interpreting the evidence. It also allows health care providers to consider recommended actions for each of those predicted outcomes. It carefully links clinical priorities and measurable events such as clinical protocols or cost-effectiveness to ensure that viable solutions are recommended.

To illustrate, a healthcare provider might be able to forecast a patient’s likely return to the hospital in the very next month using predictive analysis. On the other hand, prescriptive analytics would be able to drive decisions regarding the associated cost simulation, pending medication, real-time bed counts, and so on. Or, it could help you decide if you need to adjust order sets for in-home follow-up. It empowers the hospital staff to identify the patient with a greater risk of readmission and take needed action to mitigate such risks.
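The difference between the two approaches can be sketched in a few lines of Python. This is only an illustration: it assumes a readmission risk score has already been produced by some predictive model, and the `PatientForecast` fields, the `recommend_actions` function, and every threshold are hypothetical, not clinical guidance or any vendor's API.

```python
from dataclasses import dataclass


@dataclass
class PatientForecast:
    """Output of a (hypothetical) predictive model for one patient."""
    patient_id: str
    readmission_risk: float   # predicted probability of readmission within a month
    pending_medications: int  # prescriptions still open at discharge


def recommend_actions(forecast: PatientForecast, free_beds: int) -> list[str]:
    """Turn a prediction into suggested next steps (the prescriptive part).

    All thresholds below are illustrative assumptions, not clinical rules.
    """
    actions = []
    if forecast.readmission_risk >= 0.6:
        actions.append("schedule in-home follow-up within 7 days")
        if free_beds < 5:
            actions.append("flag bed capacity for likely readmission")
    if forecast.pending_medications > 0:
        actions.append("confirm pending medications before discharge")
    if not actions:
        actions.append("standard discharge follow-up")
    return actions


high_risk = PatientForecast("p-001", readmission_risk=0.72, pending_medications=2)
print(recommend_actions(high_risk, free_beds=3))
```

The predictive step ends at `readmission_risk`; the prescriptive step is everything `recommend_actions` does with that number and with operational data such as the bed count.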

3. Sound Financial Decisions

Prescriptive analytics has the capability to lower the cost of healthcare from patient bills to the cost of running hospital departments. In other words, it helps in making sound financial and operational decisions, providing short-term and long-term solutions to administrative and financial challenges.

Gain the Benefits of Prescriptive Analytics

Prescriptive analytics will offer even greater scope and depth as developers improve the underlying technologies. It is already making truly meaningful advances in the quality and timeliness of patient care and is reducing clinical and financial risks. Are you ready to get on board? Contact us, a top software development company, for help.

Stay up to date on what's new

Featured Blogs

Stay up to date on

what's new

Talk To Our Experts

Fingent Receives High Praise from Leading Businesses Around The World!

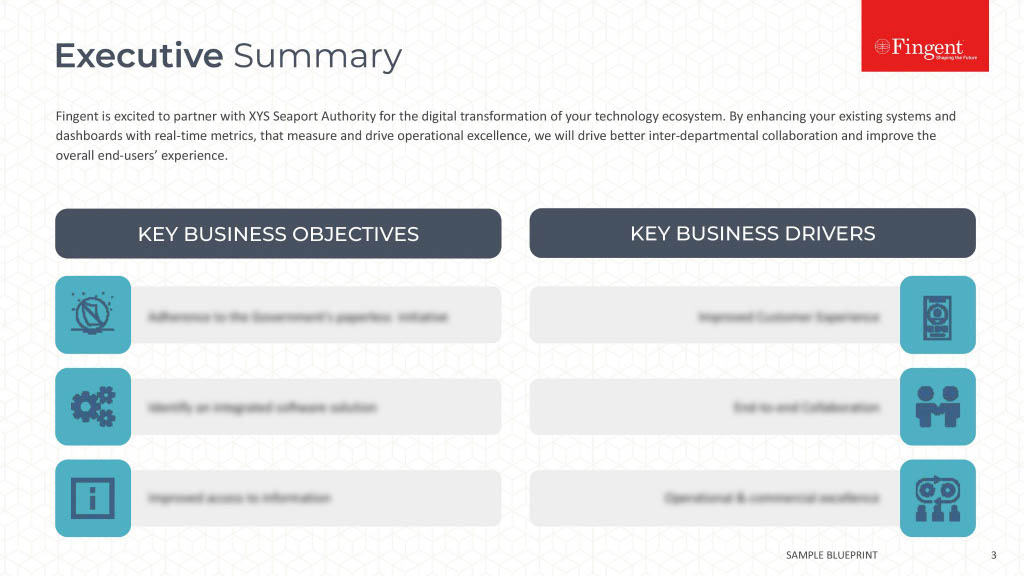

In today’s increasingly digital marketplace, technology is key to gaining a competitive advantage. Of course, with technology advancing at an unprecedented rate, determining how to most effectively deploy the latest technologies is easier said than done. As a top custom software development company, we build high-quality, cost-effective web and mobile solutions that deploy the newest technologies and meet all of your enterprise needs.

We’re committed to delivering the best quality and customer service — and for that reason, we’re a trusted partner to some of the world’s leading enterprises and businesses.

We know how important it is for potential buyers to peruse client testimonials and understand what it’s like to work with us before signing a contract, and that’s why we’ve partnered with the ratings and reviews platform Clutch.

Of more than 300 firms, Clutch ranks us among the top 3 software developers in NY! Clutch determines industry leaders based on market research and client feedback, so we’re immensely grateful to the clients who have left reviews for us. One of our recent reviews was from Wage CALC, a company that provides attorneys and legal professionals with a better way to manage their wage and hour cases with wage & analysis software.

Wage CALC was in need of custom software development services. They were looking for a trustworthy, well-known developer — and they found us on Clutch! Deploying a Microsoft Excel prototype that they’d created, we built their cloud-based app from the ground up.

“I’ve had such an excellent experience with them. Fingent exceeded my expectations.” — Founder & CEO, Wage CALC

The client was impressed that we were able to break down their complex, formula-heavy prototype. They commended our professional and responsive team and were particularly appreciative of how our SVP helped to streamline communication between our team in India and theirs in California.

We received another 5-star review from a large transportation and logistics company that specializes in oil and gas equipment pickup and delivery. There’s a significant technology gap when it comes to processing orders, invoicing, and workflow management within the logistics industry, and we were prepared to change that.

We developed an end-to-end transportation management solution. The solution allows customer service representatives to assign vehicles for order pickup and delivery, while drivers can use a mobile version of the solution to send documentation and process tickets and billing. The company’s accounting team also uses the system to do invoicing, collect payments, and close orders.

“The management system is light years ahead of anything else in our industry.” – IT Director, Transportation Company

The client appreciated our consistency and availability, along with our team’s impressive knowledge and talent.

Meanwhile, The Manifest, Clutch’s partner site listing market experts, ranks us as the #1 custom software development company.

Fingent is not recognized by Clutch alone! A few other well-known rating sites, such as Software World, have also recognized Fingent for its expertise in software development and technology solutions.

Learn more on why Fingent is the most trusted Tech Partner for emerging enterprises, and how our expertise can benefit your business. Contact us today — we offer free consultations!

Turning The Year Of Multi-Cloud Adoption for Enterprises

There has been a lot of hype around businesses adopting multi-cloud strategies that make use of public, private, and hybrid cloud services. Businesses, especially mid-market and enterprise-level organizations, can treat a multi-cloud strategy as a smart investment by leveraging its resilient performance and virtual infrastructure.

A multi-cloud strategy is all about adopting a mix of IaaS (Infrastructure as a Service) offerings from multiple cloud providers and sharing workloads among these services, which should be reliable, secure, flexible, and, of course, cost-effective.

Why Must Businesses Opt For A Multi-Cloud Strategy?

Businesses can adopt a multi-cloud strategy to acquire an optimal distribution of assets across the user’s cloud-hosting environments. With a multi-cloud strategy, businesses can have access to multiple options such as favorable Service Level Agreement terms and conditions, greater upload speed selection, customizable capacity, cost terms, and many more.

How Can Businesses Make A Multi-Cloud Adoption Decision?

Multi-cloud adoption decisions are based on 3 major considerations:

- Sourcing – Sourcing from multiple providers improves agility and avoids or minimizes the chance of vendor lock-in. This decision can be driven by factors such as performance, data sovereignty, availability, regulatory requirements, and so on.

- Architecture – Architecture is a major decision driver because many modern applications are modular, so they can span multiple cloud providers and obtain services from any number of clouds.

- Governance – Businesses can now standardize policies, procedures, processes, and even share tools that can enable cost governance. By adopting services from multiple cloud providers, enterprises can now ensure operational control, unify administrative processes, and monitor their IT systems more effectively and efficiently.

Better disaster recovery and easier migration are the other key benefits that drive enterprises to adopt multi-cloud strategies.

Related Reading: Cloud Computing Trends To Expect In 2020

Top 7 Reasons To Adopt Multi-Cloud For Your Business

1. Ability To Find The Best-In-Class Multi-Cloud Providers

Business administrators can bring in the best-in-class cloud hosting provider for each task, based on what best suits their requirements. In a recent survey by Gartner, 81% of respondents said that the multi-cloud approach proved beneficial to them. Businesses are free to make their decisions based on the sourcing, architecture, and governance factors mentioned above.

2. Agility

According to a recent study by RightScale, organizations leverage almost 5 different cloud platforms on average. This figure shows the transformation of enterprises increasingly towards multi-cloud environments. Businesses struggling with legacy IT systems, hardware suppliers, and on-premise structures can benefit from adopting multi-cloud infrastructures to improve agility as well as workload mobility amongst heterogeneous cloud platforms.

3. Flexibility And Scalability

With a competent multi-cloud adoption, enterprises can now scale their storage up or down based on their requirements. A multi-cloud environment is a perfect place for the storage of data with proper automation as well as real-time syncing. Based on the requirements of individual data segments, businesses can depend on multiple cloud vendors specifically. For improved scalability, enterprises must focus on achieving the following 4 key factors:

- A single view of each cloud asset

- Portable application design

- The capability to automate and orchestrate across multiple clouds

- Improved workload placement

4. Network Performance Improvement

With a multi-cloud interconnection, enterprises can now create high-speed, low-latency infrastructures. This helps to reduce the costs associated with integrating clouds with the existing IT system. When businesses extend their networks to multiple providers in this manner, proximity is ensured and low-latency connections are established, which in turn improve the application’s response time and give the user a better experience.

5. Improved Risk Management

Risk management is a great advantage that multi-cloud strategies can provide businesses with. For instance, consider the case where a vendor has an infrastructure meltdown or suffers an attack. A multi-cloud user can mitigate the risk by switching immediately to another service provider, to a backup, or to a private cloud. Adopting redundant, independent systems that provide robust authentication features, vulnerability testing, and API asset consolidation ensures proper risk management.
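As a rough illustration of the failover idea described above, the following Python sketch picks the first healthy provider from a priority list. The provider names and the `pick_provider` helper are hypothetical, and a real setup would get the health status from monitoring rather than from a hard-coded dictionary.

```python
def pick_provider(priority, health):
    """Return the first provider in priority order that is reported healthy.

    `priority` is an ordered list of provider names; `health` maps a name to
    True/False. Providers with no health report are treated as unavailable.
    """
    for name in priority:
        if health.get(name, False):
            return name
    return None  # nothing is available; escalate to a human


# Hypothetical providers: the primary vendor is down, so traffic moves on.
priority = ["public-cloud-a", "public-cloud-b", "private-cloud"]
status = {"public-cloud-a": False, "public-cloud-b": True, "private-cloud": True}

active = pick_provider(priority, status)
print(active)  # prints "public-cloud-b"
```

The point of the design is that the fallback order is decided in advance, so an outage at one vendor becomes a routing decision instead of an emergency.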

6. Prevention Of Vendor Lock-In

With a multi-cloud strategy, enterprises can evaluate the benefits, terms, and pitfalls of multiple service providers and can choose the option to switch to another vendor after negotiation and careful validation. Analyzing terms and conditions before signing a partnership with a vendor can prevent vendor lock-in situations.

7. Competitive Pricing

Enterprises can choose between vendors and select the best-suited one based on offerings such as adjustable contracts, flexible payment schemes, the capacity to customize, and many other features.

To learn more about adopting an effective multi-cloud strategy and the benefits it offers, give us a call and talk to an expert.

It’s Time to Bid Goodbye to the Legacy Technology!

The decade’s end has seen numerous inevitable changes in the technology market. It hasn’t been long since we bid adieu to Python 2, and now Microsoft Silverlight is nearing its end-of-life!

This surely brings a million questions to your curious mind!

Why did Microsoft decide to end all support for Silverlight? What are the next best alternatives available in the market? And most of all, is it okay to still keep using Silverlight?

Read on as we answer it all!

What is Microsoft Silverlight?

Silverlight, an application framework designed by Microsoft, has been driving rich media on the internet since 2007. Created as an alternative to Adobe Flash, this free, browser-focused developer tool facilitated web development by enabling computers and browsers to utilize UI elements and associated plugins for rich media streaming. With the emergence of video streaming platforms like Netflix and Amazon Prime, Silverlight turned out to be a great option to enable sophisticated effects.

So What Led To The Demise of Microsoft Silverlight?

A couple of things, but mostly Silverlight could not catch up with the rapidly evolving software market!

When Microsoft Silverlight was released in 2007, it looked like a huge success. With the successful online streaming of the Beijing Olympics in 2008, the political conventions of 2008, and the 2010 Winter Olympics, Silverlight was on a roll, later bringing major video streaming platforms like Netflix and Amazon Prime on board.

However, Silverlight could not shine for long. A few problems started to surface soon. Bugs in several applications were just one manifestation. The worst issues came about with Microsoft misjudging the real requirements of the market.

Although Silverlight reduced the user’s dependency on Flash to access rich graphics, animations, videos, and live streams online, it did so with a heavy reliance on Microsoft tools at the backend. Using the Microsoft .NET Framework and the XAML markup format, Silverlight offered support for Windows Media Audio (WMA), Windows Media Video (WMV), Advanced Audio Coding (AAC), and other formats.

This seemed difficult as well as risky for developers, who would have to depend on a single vendor’s framework. Meanwhile, the constant push to upgrade Silverlight made things more complicated, leaving developers more comfortable adopting low-cost alternatives like Flash and JavaScript over Silverlight. With HTML5 and other browser standards on the rise, Silverlight became an outlier in the market.

In 2013, the Redmond giant stopped developing Silverlight but continued to roll out bug fixes and patches regularly. In September 2015, Google Chrome ended support for Silverlight, followed by Firefox in March 2017. Microsoft Edge does not support Silverlight plug-ins at all, and with modern browsers transitioning to HTML5, Microsoft did not see any need to keep maintaining this application framework.

So, it’s official! Microsoft has announced that support for Silverlight will end on October 12, 2021.

And what is Netflix going to do? Well, Netflix currently supports Silverlight 4 and Silverlight 5, so Netflix viewers on a Windows XP or Windows 7 PC (both now themselves unsupported) can use either the Silverlight plug-in or the HTML5 player.

What Happens After October 2021?

Not to worry, there won’t be a big boom on October 12, 2021!

It is true that Silverlight will be completely unsupported after that date and will no longer receive any quality or security updates. However, Microsoft is not preventing or terminating any Silverlight applications for now.

So should you still be using Silverlight?

Well, no! Fewer and fewer users will be able to use Silverlight-driven apps. The situation will only get worse, as few developers will want to work in a dead-end development environment, which will sharply raise the cost of supporting Silverlight apps.

What Are The Next Best Options?

No doubt Microsoft Silverlight has served as a great option for developing rich apps. However, with the end of support for Silverlight, here are a couple of newer tech stacks that promise to be more reliable alternatives.

AngularJS, a popular framework maintained by Google, is a great option for developers around the world. It is an open-source framework designed to address the challenges of web development and is easy to integrate with HTML code and application modules. Moreover, it automatically synchronizes with modules, which makes the development process seamless, and, following a DOM methodology, it focuses on improving performance and testability. In addition, AngularJS uses basic HTML, which enables building rich internet applications effectively. With an MVC-based architecture and various extensions, it proves to be a great option for designing applications that are dynamic and responsive.

ReactJS is another option that can easily be labeled a “best seller”, based on the popularity and affection it has gained in the developer community. Launched in 2013, the ReactJS library is today well regarded and used by leading companies like Apple, PayPal, Netflix, and of course Facebook. React Native is a variant of the ReactJS JavaScript library that combines native application development with JavaScript UI development to build mobile apps that are highly dynamic and user-responsive. While native modules allow implementing platform-specific features for iOS and Android, the rest of the code is written in JavaScript and shared across platforms.

Related Reading: React Native Or Flutter – The Better Choice For Mobile App Development

With technologies running in and disappearing from the market, it can be quite difficult to decide on the stack of digital tools that would best fit your business. Our business and solution experts can help ensure that you transform with the right technology to meet industry challenges and enhance your revenue opportunities. To discuss more on how we can help you identify the right technology for your company, get in touch with our custom software development experts today!

Testing Types And Strategies: Choosing A Testing Method

Understanding the basics of software testing is crucial for developers and quality assurance specialists alike. To deploy better software and to find the bugs that affect application development, it is important to learn about the different types of software testing.

Types Of Software Testing

Testing is the process of executing a software program to find errors in the application being developed. Testing is critical for deploying error-free software programs. Each type of testing has its advantages and benefits. Software testing is broadly categorized into two types: Functional and Non-Functional testing.

Functional Testing Versus Non-Functional Testing

Functional Testing is used to verify the functions of a software application according to the requirements specification. Functional testing mainly involves black box testing and does not depend on the source code of the application.

Functional Testing involves checking User Interface, Database, APIs, Client/Server applications as well as security and functionality of the software under test. Functional testing can be done either manually or by making use of automation.

The various types of Functional Testing include the following:

- Unit Testing

- Integration Testing

- System Testing

- Sanity Testing

- Smoke Testing

- Interface Testing

- Regression Testing

- Beta/Acceptance Testing

Non-Functional Testing is done to check the non-functional aspects such as performance, usability, reliability, and so on of the application under test.

The various types of Non-Functional Testing include the following:

- Performance Testing

- Load Testing

- Stress Testing

- Volume Testing

- Security Testing

- Compatibility Testing

- Install Testing

- Recovery Testing

- Reliability Testing

- Usability Testing

- Compliance Testing

- Localization Testing

The 7 Most Common Types Of Software Testing

Type 1: Black-box Testing

Black-box testing is applied to verify the functionality of the software by just focusing on the various inputs and outputs of the application rather than going deep into its internal structure, design, or implementation. Black-box testing is performed from the user’s perspective.
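A minimal Python sketch of the idea follows. The `apply_discount` function is a hypothetical system under test; the point is that the test cases are written only from its documented inputs and outputs, without reading its body.

```python
def apply_discount(price: float, percent: float) -> float:
    """Hypothetical system under test; a black-box tester never reads this body."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


# Black-box cases: (inputs, expected output), derived from the specification only.
cases = [
    ((100.0, 0), 100.0),   # no discount
    ((100.0, 25), 75.0),   # typical discount
    ((80.0, 100), 0.0),    # boundary: everything off
]

results = [apply_discount(*args) == expected for args, expected in cases]
print(all(results))  # prints True


# An invalid input should be rejected, whatever the implementation looks like.
try:
    apply_discount(50.0, 150)
    rejected = False
except ValueError:
    rejected = True
```

If the implementation were swapped for a completely different one that honors the same specification, none of these cases would need to change.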

Type 2: White-Box Testing

The White-Box software testing strategy tests an application with access to the actual source code as well as focusing on the internal structure, design, and implementation. This testing method is known by different names such as Open Box testing, Clear Box Testing, Glass Box Testing, Transparent Box Testing, Code-Based Testing, and Structural Testing. White-box testing offers the advantage of rapid problem and bug spotting.

Type 3: Acceptance Testing

Acceptance Testing is a QA (Quality Assurance) process that determines to what extent the software wins the end user’s approval. Also known as UAT (User Acceptance Testing) or system testing, it can test the usability of the system, its functionality, or both. Depending on the enterprise, acceptance testing can take the form of end-user testing, beta testing, application testing, or field testing. The advantage of acceptance testing is that usability issues can be discovered and fixed at an early stage.

Related Reading: Quality Assurance in Software Testing – Past, Present & Future

Type 4: Automated Testing

Automated testing is a method in which specialized tools are utilized to control the execution of various tests and the verification of the results is automated. This type of testing compares the actual results against the expected results. The advantage of automated testing is that it avoids the need for running through test cases manually, which is both tedious and error-prone, especially while working in an agile environment.

Type 5: Regression Testing

Regression testing is a testing practice that verifies whether the system is still working fine, even after incremental development in the application. Most automated tests performed are regression tests. It ensures that any change in the source code does not have any adverse effects on the application.
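To show how automated and regression testing fit together, here is a small sketch using Python's standard `unittest` module. The `slugify` function and the earlier bug it refers to are hypothetical; the tests simply pin down expected results so that a later code change that breaks them is caught automatically.

```python
import unittest


def slugify(title: str) -> str:
    """Hypothetical function under test: turn a title into a URL slug."""
    return "-".join(title.lower().split())


class SlugifyRegressionTests(unittest.TestCase):
    def test_basic_title(self):
        # Actual result is compared against the expected result.
        self.assertEqual(slugify("Hello World"), "hello-world")

    def test_extra_whitespace(self):
        # Pins behavior from a (hypothetical) earlier bug fix so it cannot
        # silently come back after future changes.
        self.assertEqual(slugify("  Hello   World "), "hello-world")


def run_suite() -> unittest.TestResult:
    """Load and run the regression suite, returning the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(SlugifyRegressionTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)


result = run_suite()
print(result.wasSuccessful())
```

In practice a suite like this would run on every commit, which is what makes it practical in an agile environment.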

Type 6: Functional Testing

Functional Testing tests for the actual functionality of the software. This type of testing focuses on the results of the system processing and not on how the processing takes place. During functional testing, the internal structure of the system is not known to the tester.

Type 7: Exploratory Testing

As the name indicates, Exploratory testing is all about exploring the application where the tester is constantly on the lookout for what and where to test. This approach is applied in cases where there is no or poor documentation and when there is limited time left for the testing process to be completed.

Related Reading: A Winning Mobile Testing Strategy: The Way to Go

All the methods mentioned above are only some of the most common options of software testing. The list is huge and specific methods are adopted by development vendors based on the project requirements. Sometimes, the terminologies used by each organization to define a testing method also differ from one another. However, the concept remains the same. Depending on the project requirement and scope variations, the testing type, processes, and implementation strategies keep changing.

Like to know more about Fingent’s expertise in custom software development and testing? Get in touch with our expert.

Understanding the Importance of Time Series Forecasting

To be able to see the future. Wouldn’t that be wonderful! We probably will get there someday, but time series forecasting gets you close. It gives you the ability to “see” ahead of time and succeed in your business. In this blog, we will look at what time series forecasting is, how machine learning helps in investigating time-series data, and explore a few guiding principles and see how it can benefit your business.

What Is Time Series Forecasting?

A time series is a collection of data points recorded at regular intervals. Time series forecasting is a machine learning technique that analyzes such data and its ordering in time to predict future events. It provides reasonably accurate estimates of future trends based on historical time-series data.

The book Time Series Analysis: With Applications in R describes the twofold purpose of time series analysis, which is “to understand or model the stochastic mechanism that gives rise to an observed series and to predict or forecast the future values of a series based on the history of that series.”

Time series allows you to analyze major patterns such as trends, seasonality, cyclicity, and irregularity. Time series analysis is used for various applications such as stock market analysis, pattern recognition, earthquake prediction, economic forecasting, census analysis and so on.

Related Reading: Can Machine Learning Predict And Prevent Fraudsters?

Four Guiding Principles for Success in Time Series Forecasting

1. Understand the Different Time Series Patterns

Time series include trends, cycles, and seasonality. Unfortunately, many confuse seasonal behavior with cyclic behavior. To avoid confusion, let’s understand what they are:

- Trend: A long-term increase or decrease in the data over a period of time is called a trend. A trend can be deterministic, meaning there is an underlying rationale for it, or stochastic, meaning it is a random feature of the time series.

- Seasonal: Oftentimes, seasonality is of a fixed and known frequency. When a time series is affected by seasonal factors like the time of the year or the day of the week, a seasonal pattern occurs.

- Cyclic: When the data exhibit rises and falls that are not of a fixed frequency, a cycle occurs. This is what separates a cyclic pattern from a seasonal one.
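The trend and seasonal patterns listed above can be separated with a very simple classical decomposition. The sketch below is an illustration in plain Python, not a production method: the series is synthetic (a straight-line trend plus a repeating pattern of period 3), and the helper name `centered_moving_average` is our own.

```python
from statistics import mean

PERIOD = 3
seasonal_true = [1.0, 0.0, -1.0]  # repeats every PERIOD steps, sums to zero

# Synthetic series: linear trend (10 + t) plus the seasonal pattern.
series = [10.0 + t + seasonal_true[t % PERIOD] for t in range(12)]


def centered_moving_average(values, window):
    """Estimate the trend with a centered moving average (odd window only)."""
    half = window // 2
    return {
        t: mean(values[t - half : t + half + 1])
        for t in range(half, len(values) - half)
    }


# Averaging over one full season cancels the seasonal effect, leaving the trend.
trend = centered_moving_average(series, PERIOD)

# What is left after removing the trend is the seasonal component.
detrended = {t: series[t] - trend[t] for t in trend}

# Average the detrended values by position within the season.
seasonal_est = [
    mean(v for t, v in detrended.items() if t % PERIOD == k)
    for k in range(PERIOD)
]
print(seasonal_est)  # prints [1.0, 0.0, -1.0]
```

Because the seasonal pattern has a fixed, known period, it can be averaged out this way. A cyclic pattern has no fixed period, so the same trick does not isolate it cleanly.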

2. Use Features Carefully

It is important to use features carefully, especially when their future real values are unclear. However, if the features are predictable or have patterns, you will be able to build a forecast model based on them. Using predicted values as features is risky, as it can cause substantial errors and produce a biased result. Properties of a time series and time-related features that can be calculated could be added to time series models. Mistakes in handling features can easily compound and lead to extremely skewed results, so extreme caution is in order.

Related Reading: Machine Learning Vs Deep Learning: Statistical Models That Redefine Business

3. Be Prepared to Handle Smaller Time Series

Don’t be quick to dismiss smaller time series as a drawback. All time-related datasets are useful in time series forecasting. A smaller dataset wouldn’t require external memory for your computer, which makes it easier to analyze the entire dataset and make plots that could be analyzed graphically.

4. Choose The Right Resolution

Having a clear idea of the objectives of your analysis will help yield better results. It will reduce the risk of propagating the error to the total. An unbiased model’s residuals would either be zero or close to zero. A white noise series is expected to have all autocorrelations close to zero. In other words, choosing the right resolution will also eliminate noisy data that makes modeling difficult.
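The statement about white noise can be checked numerically. The following sketch computes sample autocorrelations in plain Python; the `autocorrelation` helper, the simulated noise, and the choice of 500 points are illustrative assumptions. It contrasts simulated white noise with a series that still contains a trend.

```python
import random


def autocorrelation(values, lag):
    """Sample autocorrelation of `values` at the given positive lag."""
    n = len(values)
    m = sum(values) / n
    denom = sum((v - m) ** 2 for v in values)
    num = sum((values[t] - m) * (values[t + lag] - m) for t in range(n - lag))
    return num / denom


random.seed(0)  # fixed seed so the run is repeatable

# Residuals of a well-behaved model should look like this: pure noise.
noise = [random.gauss(0, 1) for _ in range(500)]
acf_noise = [autocorrelation(noise, k) for k in range(1, 6)]

# A series with an unmodeled trend is strongly autocorrelated instead.
trend_series = [float(t) for t in range(500)]
acf_trend_lag1 = autocorrelation(trend_series, 1)

print([round(r, 3) for r in acf_noise])
print(round(acf_trend_lag1, 3))
```

If the residuals of a forecasting model show autocorrelations far from zero, as the trending series does here, there is still structure left that the model has not captured.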

Types of Time Series Data and Forecasts

Time series analysis basically deals with three types of data: time-series data, cross-sectional data, and pooled data, which is a combination of time-series data and cross-sectional data. Large amounts of data give you the opportunity for exploratory data analysis, model fidelity, and model testing and tuning. The question you could ask yourself is, how much data is available and how much data am I able to collect?

There are different types of forecasting that could be applied depending on the time horizon. They are near-future, medium-future and long-term future predictions. Think carefully about which time horizon prediction you need.

Organizations should be able to decide which forecast works best for their firm. A rolling forecast will re-forecast the next twelve months, whereas the traditional, or a static annual forecast creates new forecasts towards the end of the year. Think about whether you want your forecasts updated regularly or you need a more static approach.
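The difference between a static and a rolling forecast can be sketched as follows. The forecasting method here is deliberately naive (repeat the average of the last twelve observations) and is only a stand-in for whatever model an organization actually uses; the function names and figures are hypothetical.

```python
def forecast_next(history, horizon=12, window=12):
    """Naive forecast: repeat the mean of the last `window` observations."""
    recent = history[-window:]
    level = sum(recent) / len(recent)
    return [level] * horizon


def rolling_forecasts(history, new_observations, horizon=12):
    """Re-forecast the next `horizon` periods each time a new value arrives."""
    data = list(history)
    forecasts = []
    for value in new_observations:
        data.append(value)
        forecasts.append(forecast_next(data, horizon))
    return forecasts


history = [100.0] * 12  # twelve months of flat sales

# Static: made once and left alone until the next annual cycle.
static = forecast_next(history)

# Rolling: refreshed as each new month of actuals comes in.
rolling = rolling_forecasts(history, [112.0, 124.0])

print(static[0])      # prints 100.0
print(rolling[0][0])  # prints 101.0
print(rolling[1][0])  # prints 103.0
```

The static forecast never sees the two stronger months, while the rolling forecast moves with them, at the cost of being re-run every period.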

By allowing you to harness down-sampling and up-sampling data, the concept of temporal hierarchies can mitigate modeling uncertainty. It is important to ask yourself, what temporal frequencies require forecasts?
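Down-sampling and up-sampling are simple to illustrate. In the sketch below, monthly figures are aggregated to quarters and then spread back evenly over months. The helper names and numbers are made up for illustration, and real temporal-hierarchy methods reconcile forecasts across levels in more careful ways.

```python
def downsample(values, factor):
    """Aggregate consecutive groups of `factor` values by summing them."""
    return [sum(values[i : i + factor]) for i in range(0, len(values), factor)]


def upsample(values, factor):
    """Spread each value evenly across `factor` finer-grained periods."""
    return [v / factor for v in values for _ in range(factor)]


monthly = [30.0, 60.0, 90.0, 120.0, 150.0, 180.0]

quarterly = downsample(monthly, 3)
print(quarterly)  # prints [180.0, 450.0]

back_to_monthly = upsample(quarterly, 3)
print(back_to_monthly)  # prints [60.0, 60.0, 60.0, 150.0, 150.0, 150.0]
```

The totals agree at both levels, but the month-to-month detail is lost on the way back, which is why the choice of temporal frequency matters.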

Keep Up With Time

As businesses grow more dynamic, forecasting will get increasingly harder because of the increasing amount of data needed to build the Time Series Forecasting model. Still, implementing the principles outlined in this blog will help your organization be better equipped for success. If you have any questions on how to do this, just drop us a message.

A Look Into The Cloud Computing Trends for 2024

“Fewer, but larger, public cloud platform providers and a maturing SaaS ecosystem will dominate enterprise cloud spending” – The Public Cloud Market Outlook, 2019 To 2022 Forrester Report.

Organizations are recognizing the importance of cloud computing and have been adopting the technology steadily over the past few years. With recent technological advancements creating new excitement around the idea of cloud computing, adoption is now skyrocketing!

According to Gartner, the worldwide public cloud services market will grow 17% in 2020, from $227.8 billion in 2019 to $266.4 billion in 2020. This makes it vital for organizations to identify the forces that will shape the cloud computing market this year. This article will help you do that as we discuss five specific trends that will transform cloud computing in 2024.

Why Keep Up with Cloud Computing?

Aggregated mostly around Amazon, Google and Microsoft, the cloud market underwent a profound change in the recent past. The pace for cloud adoption and innovation will inevitably continue to accelerate across industries and regions providing new opportunities, and new levels of quality and efficiency. The question you must be asking is: What is in store for the cloud computing market and how should you prepare for it in 2024?

1. Shifting Gears from Multi-Cloud to Hybrid-Cloud

In 2019, organizations routinely deployed workloads across multiple clouds. To achieve the expected business outcomes, organizations will have to adopt the right cloud strategy. A hybrid cloud computing structure orchestrates local servers, private cloud, and third-party public cloud services to achieve the desired results. According to the RightScale 2019 State of the Cloud Report, the hybrid cloud adoption rate was estimated at 58% last year.

In this transitional era, the hybrid cloud will become an integral part of the long-term vision for how industries meet their needs. It can provide a seamless experience to enterprises and help them solve complicated challenges around latency. Customers, too, won't have to deal with two different pieces of infrastructure: on-premise and public cloud. Thus, the shift to a hybrid cloud will make things easier for both the organization and its customers.

Related Reading: Hybrid Cloud Infrastructure: How It Benefits Your Business

2. Serverless Computing

“Serverless computation is going to fundamentally change not only the economics of what is back-end computing, but it’s going to be the core of the future of distributed computing,” says Satya Nadella, Chief Executive Officer at Microsoft. This comment clearly shows what the future of serverless computing is.

Serverless computing lets developers focus only on their core product without worrying about operating and managing servers. This advantage is what moves enterprises to adopt serverless computing. According to Gartner, more than 20% of global enterprises will deploy serverless computing technologies by 2020.

3. Cloud Security will Become Paramount

Many organizations feel that cloud computing could pose security issues. They might have concerns about regulation and privacy, along with compliance and governance. Consequently, security features for public data will become a key focus in coming years. It will not be just about access controls or policy creation. Aspects such as data encryption, cloud workload security, and threat intelligence will gain priority as part of an organization's security measures. In the future, we will see security features such as privileged access management and shared responsibility models.

According to Kristin Davis of 42crunch.com, 2019 was the year API security threats came to notice. As the year progressed, there were many high-profile API breaches and vulnerabilities, including the ones at Facebook, Amazon Ring, GitHub, Cisco, Kubernetes, Uber, and Verizon. In its October 2019 report, Gartner estimated that by 2021, exposed APIs would form a larger attack surface than UIs for 90% of web-enabled applications. In coming years, we expect API security to rise to the top of the chief information security officer's agenda. DevOps tools and processes are also expanding into DevSecOps to lower risk and implement security by design.

Mihai Corbuleac, Senior IT Consultant at StratusPointIT, predicts that security acquisitions will make more headlines in 2020, as they did over the last year. This is because cloud companies that can't develop modern security solutions in-house have to buy them.

Related Reading: How Secure is Your Business in a Multi-Cloud Environment

4. Digital Natives

As the workforce evolves, the expectations of the workers will definitely increase. Those joining the workforce will be well-acquainted with cloud computing and its advantages. Such workers are called ‘digital natives.’

Organizations will have two sets of workers as a consequence: those who have adopted digital best practices and those who have not. This would call for a need to train the second set of workers, which is called ‘reverse mentoring.’ The adoption of cloud computing and related technologies will enable organizations to integrate both the workgroups into one unified workforce.

5. Quantum Computing

Quantum computing requires massive hardware developments. This opens up the potential to exponentially increase the efficiency of computers in coming years. It allows computers and their servers to process information more rapidly than ever before. Quantum computing also has the potential to limit energy consumption, since it could use less electricity while generating massive amounts of computing power. Best of all, quantum computing can have a positive effect on the environment and the economy.

Are You Keeping Up the Pace?

Whether you are a large organization or a small one, cloud computing will remain a compelling, fast-moving force in the future. Adopting cloud computing technology will enable organizations to mitigate risks and capitalize on opportunities. Ultimately, organizations will have a number of decisions to make with regard to cloud computing, including when and how to adopt the technology and which specific model to adopt.

Related Reading: Cloud Migration: Essentials to Know Before You Jump on the Bandwagon

With years of experience in helping clients transform their business by the power of the cloud, Fingent can help you understand and implement this technology seamlessly in your business. Contact us to know more.

Every new project in an organization goes through an analysis phase. The information collected during the analysis forms the backbone for critical decisions with regard to complexity, resources, frameworks, time schedule, cost, etc. Over the years, there have been several techniques to simplify the project analysis phase, but most of them remain inadequate when it comes to the accuracy of the outcome. Even clearly defined projects can fall apart during the later stages without an accurate analysis methodology in place.

Mitigating risk in software projects is of prime importance. Usually, it starts with delineating precise measurements of the scope, performance, duration, quality and other key efficiency metrics of the project. Advanced analysis techniques like Function Point Analysis (FPA) give a clear picture of each of these metrics, chiefly those related to project scope, staffing, cost and time, which helps in the management, control, and customization of software development right from its initial planning phases.

Function Point Analysis is a standardized method commonly used as an estimation technique in software engineering. First defined by Allan J. Albrecht in 1979 at IBM, Function Point Analysis has since undergone several modifications, mainly by the International Function Point Users Group (IFPUG).

What is Function Point Analysis?

In simple words, FPA is a technique used to measure software requirements based on the different functions that the requirement can be split into. Each function is assigned points based on the FPA rules, and these points are then summed using the FPA formula. The final figure shows the total man-hours required to achieve the complete requirement.

Components of Function Point Analysis

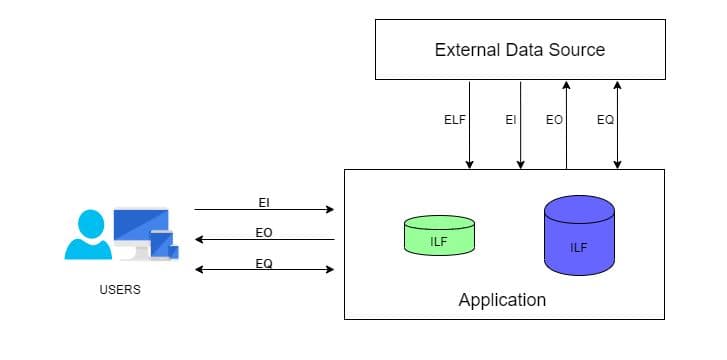

Based on how the system components interact internally and with external users, applications, etc., they are categorized into five types:

- External Inputs (EI): This is the process of capturing inputs from users, such as control information or business information, and storing them in internal/external logic database files.

- External Outputs (EO): This is the process of sending out data to external users or systems. The data might be directly grabbed from database files or might undergo some system-level processing.

- Inquiries (EQ): This process includes both input and output components. The data is then processed to extract relevant information from internal/external database files.

- Internal Logic File (ILF): This is the set of data present within the system. The majority of the data is interrelated and is captured via the inputs received from external sources.

- External Logic File (ELF): This is the set of data from external resources or external applications. The majority of the data from the external resource is used by the system for reference purposes. IFPUG documentation calls this component an External Interface File (EIF).

Below are some abbreviations which need to be understood to know the logic in-depth:

Data Element Type (DET): This can be defined as a single, unique, non-repetitive data field.

Record Element Type (RET): This can be defined as a group of DETs. In a more generic way, we can call this a table of data fields.

File Type Referenced (FTR): This can be defined as a file type referenced by a transaction (Input/Output/Inquiry). This can be either an internal logic file or an external interface file.

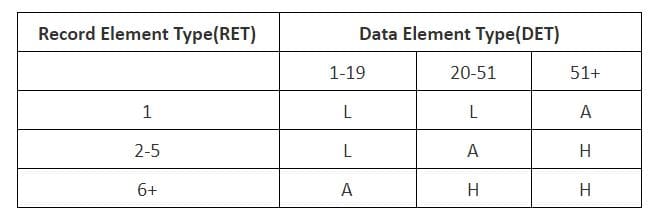

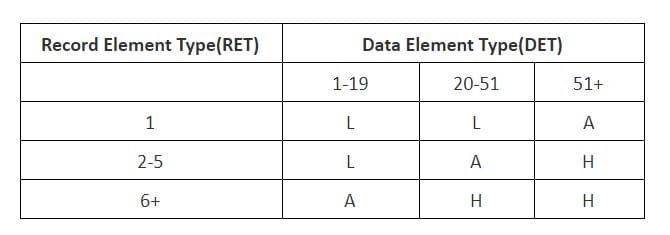

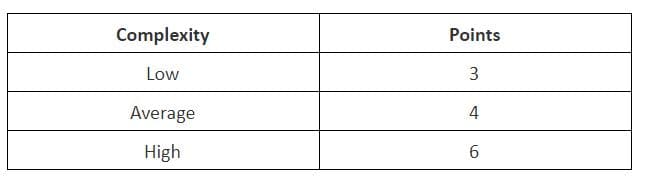

Based on the number of DETs and RETs, all the five components of FPA are classified into High, Average and Low complexity based on the below table.

For Internal Logical Files

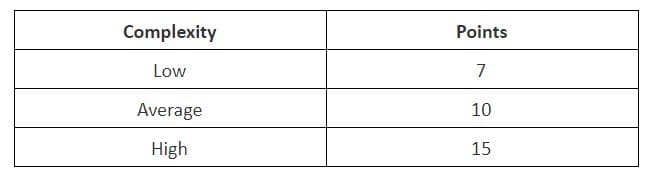

And based on the complexity, the FPA points are calculated

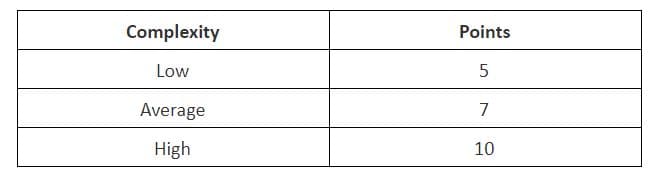

For External Logical Files

And based on the complexity, the FPA points are calculated

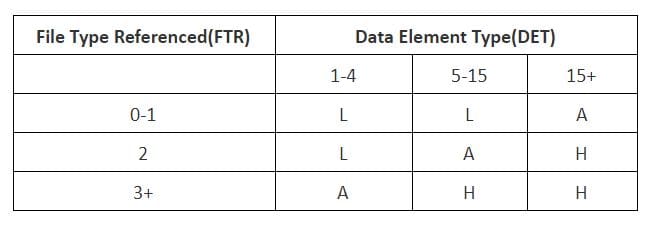

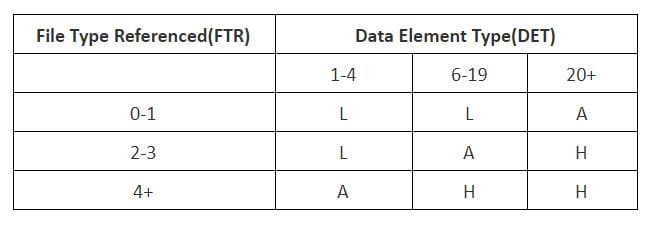

For External Input Transactions

As the External input is a Transactional type, the complexity is judged based on FTR instead of RET.

And based on the complexity, the FPA points are calculated

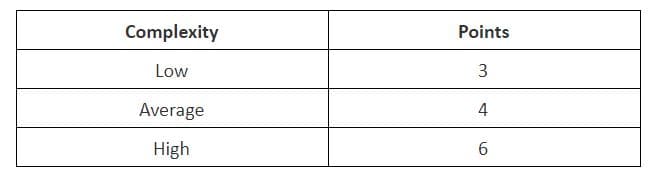

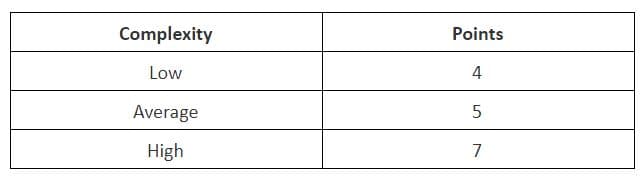

For External Output Transactions

As External Output is a Transactional type the complexity is judged based on FTR instead of RET.

And based on the complexity, the FPA points are calculated

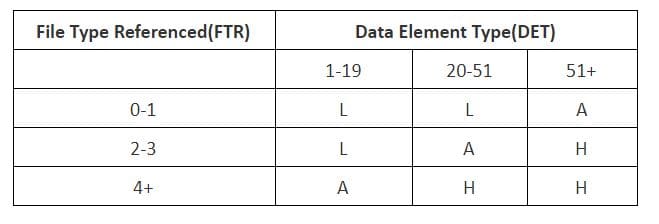

For Inquiries

As Inquiries is a Transactional type the complexity is judged based on FTR instead of RET.

And based on the complexity, the FPA points are calculated

We now have the reference charts for finding the complexity of each type of function discovered in the system, as well as the points that should be assigned based on the complexity of each component. We can now look into the calculation.
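The lookup described above can be sketched in code. The article's own tables are images, so the thresholds and weights below are an assumption: they follow the commonly published IFPUG complexity matrices and should be checked against the charts you actually use. The function names are hypothetical.

```python
import bisect

# Upper bounds of the first two bands for each component type:
# (bounds on RET for files or FTR for transactions, bounds on DET).
# Assumed from the commonly published IFPUG matrices.
THRESHOLDS = {
    "ILF": ((1, 5), (19, 50)),
    "EIF": ((1, 5), (19, 50)),
    "EI": ((1, 2), (4, 15)),
    "EO": ((1, 3), (5, 19)),
    "EQ": ((1, 3), (5, 19)),
}

# Rows are RET/FTR bands, columns are DET bands; shared by all five types.
MATRIX = (
    ("Low", "Low", "Average"),
    ("Low", "Average", "High"),
    ("Average", "High", "High"),
)

# Function points awarded per component type and complexity (assumed weights).
POINTS = {
    "ILF": {"Low": 7, "Average": 10, "High": 15},
    "EIF": {"Low": 5, "Average": 7, "High": 10},
    "EI": {"Low": 3, "Average": 4, "High": 6},
    "EO": {"Low": 4, "Average": 5, "High": 7},
    "EQ": {"Low": 3, "Average": 4, "High": 6},
}


def complexity(kind, ret_or_ftr, det):
    """Classify one component as Low, Average or High."""
    row_bounds, col_bounds = THRESHOLDS[kind]
    row = bisect.bisect_left(row_bounds, ret_or_ftr)
    col = bisect.bisect_left(col_bounds, det)
    return MATRIX[row][col]


def function_points(kind, ret_or_ftr, det):
    """Return the points for one component based on its complexity."""
    return POINTS[kind][complexity(kind, ret_or_ftr, det)]


# An internal file with 3 RETs and 25 DETs, and an input touching 3 files.
print(complexity("ILF", 3, 25), function_points("ILF", 3, 25))
print(complexity("EI", 3, 20), function_points("EI", 3, 20))
```

Keeping the thresholds and weights in plain dictionaries makes it easy to swap in different values if your organization's charts differ from the ones assumed here.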

Steps to Count the Function Points

Below are the steps used in counting the function points of a system.

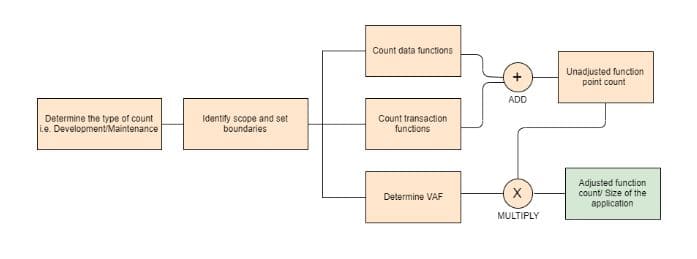

1. Type of count: The very first step of this process is to determine the type of function count. There are 3 types of function point (FP) count.

- Development Project FP Count: This measures the functions that are directly involved in the development of the final system. This would include all the phases of the project from requirements gathering to the first installation.

- Enhancement Project FP Count: This measures the functions involved in modifications to the system, that is, the changes made to the system after it goes into production.

- Application FP count: This measures the functions involved in the final deliverable, excluding the effort for functions that already existed.

2. Scope and Boundary of the Count: In the second step, the scope and boundary of the functions are identified. Boundary indicates the border between the application being measured and the external applications. Scope can be decided with the help of data screens, reports, and files.

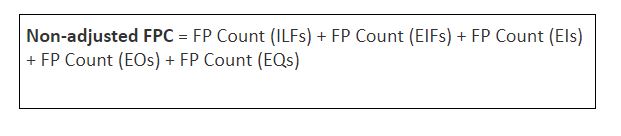

3. Unadjusted Function Point Count: This is the main step of this process where all the function points produced from the above FPA components (External Inputs, External Output, Internal Logic files, External Logic files, Inquiries) are added together and labeled as unadjusted function point count.

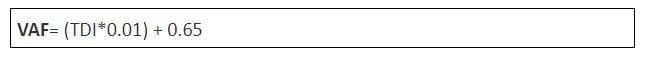

4. Value Adjustment Factor: In this step, the value adjustment factor (VAF) is determined. VAF is based on 14 General System Characteristics (GSCs) that describe the application, each rated on a scale of 0 to 5. The sum of the 14 GSC ratings is labeled the Total Degree of Influence (TDI). Because each rating is at most 5, TDI can range from 0 to 70. TDI is then used to calculate VAF, which can adjust the unadjusted count by up to 35% in either direction.

Below are the 14 GSCs listed and the mathematical formula for calculating the VAF.

- Data communications

- Distributed data processing

- Performance

- Heavily used configuration

- Transaction rate

- On-Line data entry

- End-user efficiency

- On-Line update

- Complex processing

- Reusability

- Installation ease

- Operational ease

- Facilitate change

- Multiple sites

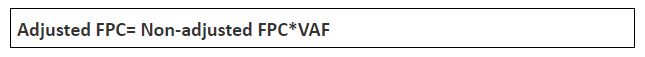

Once the unadjusted function point count and the value adjustment factor are calculated, the adjusted function point count is derived from the two values. This is done with the help of the following formula.

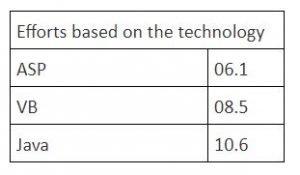

The adjusted FPC is then multiplied by a numeric value that represents the effort per function point for the chosen technology. An example follows.

If the technology selected for a particular requirement is Java, the formula to calculate the final hours is as follows:

Effort in hours = (Unadjusted FPC * VAF) * 10.6

This will give the total hours of effort required to achieve the requirement under analysis.
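The whole calculation can be sketched end to end. Because the article's formulas are shown as images, the VAF formula here (0.65 + 0.01 × TDI) is an assumption taken from the standard IFPUG method. The component points and GSC ratings are made-up inputs, the function names are hypothetical, and 10.6 is the Java hours-per-point figure quoted above.

```python
def value_adjustment_factor(gsc_ratings):
    """Compute VAF from the 14 GSC ratings (each 0 to 5).

    Assumes the standard IFPUG formula: VAF = 0.65 + 0.01 * TDI.
    """
    if len(gsc_ratings) != 14:
        raise ValueError("exactly 14 GSC ratings are required")
    if any(not 0 <= r <= 5 for r in gsc_ratings):
        raise ValueError("each GSC rating must be between 0 and 5")
    tdi = sum(gsc_ratings)  # Total Degree of Influence, 0..70
    return 0.65 + 0.01 * tdi


def estimate_effort(component_points, gsc_ratings, hours_per_point):
    """Return (unadjusted FP, VAF, adjusted FP, effort in hours)."""
    ufp = sum(component_points)
    vaf = value_adjustment_factor(gsc_ratings)
    afp = ufp * vaf
    return ufp, vaf, afp, afp * hours_per_point


# Hypothetical project: points already assigned to six components,
# and every general system characteristic rated 3.
points = [10, 7, 4, 4, 5, 3]
ratings = [3] * 14

ufp, vaf, afp, hours = estimate_effort(points, ratings, hours_per_point=10.6)
print(f"UFP={ufp}, VAF={vaf:.2f}, adjusted FP={afp:.2f}, hours={hours:.1f}")
```

With these inputs the unadjusted count is 33 and the TDI is 42, so the adjustment factor comes to 1.07. Changing `hours_per_point` is all that is needed to estimate the same requirement for a different technology.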

Merits of Function Point Analysis

- FPA measures the size of the solution instead of the size of the problem

- It helps in estimating overall project costs, schedule, and effort

- It is independent of technology/programming language

- It helps in the estimation of testing projects

- FPA gives clarity in contract negotiations as it provides a method of easier communication with business groups

Related Read: Quality Assurance in Software Testing – Past, Present & Future

References

- International Function Point User Group (IFPUG) – https://www.ifpug.org/

- ProfessionalQA.com – http://www.professionalqa.com/functional-point-analysis

- Geeksforgeeks – https://www.geeksforgeeks.org/software-engineering-functional-point-fp-analysis/

How AI and Voice Search Will Impact Your Business

“It is common now for people to say ‘I love you’ to their smart speakers,” says Professor Trevor Cox, Acoustic engineer, Salford University.

The Professor wasn’t exactly talking about the love affair between robots and humans, but his statement definitely draws attention to the growing importance of voice search technology in our lives. AI-driven voice computing technology has drastically changed the way we interact with our smart devices and it is bound to have a further impact as we move into coming years.

In this blog, we will consider six key predictions for AI-Driven voice computing in 2024.

How Essential Is AI-Driven Voice Search For Businesses?

Voice search is becoming increasingly popular and is evolving day after day. It can support basic tasks at home, organize and manage work, and the clincher – it makes shopping so much easier. No doubt about it, AI-driven voice search and conversational AI are capturing the center stage.

Related Reading: Why you can and should give your app the ability to listen and speak

Voice-based shopping is expected to hit USD 40 billion in 2022. In other words, more and more consumers will expect to interact with brands on their own terms and to have fully personalized experiences. As the number of consumers opting for voice-based searches keeps increasing, businesses have no option other than to go all-in with AI-driven voice search. With that in mind, let's see where this is going to lead businesses in the future.

Six key predictions for AI-driven voice search and conversational AI in 2024

1. Voicing a human experience in conversational AI

Chatbots are excellent, but the only downside is that most of them lack human focus. They only provide information, which is great in itself, but not enough to provide the top-notch personalized experience that consumers are looking for. This calls for a paradigm shift in conversational design where the tone, emotion, and personality of humans are incorporated into bot technologies.

Statista reports that by 2020, 50% of all internet searches will be generated through voice search. Hence, developers are already working on a language that would be crisp, one that is typically used in the film industry. Such language could also be widely used on various channels such as websites and messaging platforms.

Related Reading: Capitalizing on AI Chatbots Will Redefine Your Business: Here’s How

2. Personalization

A noteworthy accomplishment in voice recognition software enhancing personalization is the recent developments in Alexa’s voice profiling capabilities. Personalization capabilities already in place for consumers are now being made available to skill developers as part of the Alexa Skills Kit. This will allow developers to improve customers’ overall experience by using their created voice profiles.

Such personalization can be based on gender, language, age and other aspects of the user. Voice assistants are building the capacity to cater even to the emotional state of users. Some developers are aiming to create virtual entities that could act as companions or counselors.

3. Security will be addressed

Hyper personalization will require that businesses acquire large amounts of data related to each individual customer. According to a Richrelevance study, 80% of consumers demand AI transparency. They have valid reasons to be concerned about their security. This brings the onus on developers to make voice computing more secure, especially for voice payments.

4. Natural conversations

Both Google and Amazon assistants had a wake word to initiate a new command. But recently it was revealed that both companies are considering reducing the frequency of the wake word such as “Alexa.” This would eliminate the need to say the wake word again and again. It would ensure that their consumers enjoy more natural, smooth and streamlined conversations.

5. Compatibility and integration

There are several tasks a consumer can accomplish while using voice assistants such as Amazon’s Alexa or Google’s Assistant. They can control lights, appliances, smart home devices, make calls, play games, get cooking tips, and more. What the consumer expects is the integration of their devices with the voice assistant. Coming years will see a greatly increased development of voice-enabled devices.

6. Voice push notifications

A push notification is the delivery of information to a computing device. These notifications can be read by the user even when the phone is locked, which makes them a unique way to increase user engagement. Developers of Amazon's Alexa and Google Assistant have now integrated voice push notifications, which allow users to listen to their notifications if they prefer hearing them over reading them.

What Does It Mean for Your Business In 2024?

AI-driven voice computing and conversational AI are going to change all aspects of where, when and how you engage and communicate with your consumers. IDC estimates double-digit growth in the smart home market in coming years. Wherever your customers are and whatever channel they are using, you will be required to hold seamless conversations with them.

“Early bird catches the worm.” Be the first in your industry to adopt and gain the benefits of voice search and conversational AI. Call us, a top custom software development company, and find out how we can make this happen for you.

Attaining Digital Transformation Success with AIOps

Your IT infrastructure is the key pillar of your organization, and this dependency will only increase in the future. You need help to cope with this massive dependency, and digital transformation will play a crucial role in this. To make your digital transformation successful, you need something powerful, radical and forward-looking. That is what AIOps is.

Related Reading: How Digital Innovation Transformed Today’s Business World

This blog will discuss how organizations can apply AIOps to drive digital transformation and make your IT operations a success for the future of your business.

Defining AIOps and Its Crucial Role

The term AIOps was first used by Gartner in 2017 as an acronym for Artificial Intelligence for IT Operations. AIOps combines machine learning, analytics, and AI. These technologies are brought together in order to derive meaning from massive datasets. AIOps pools all kinds of data gathered from different sources and uses advanced AI and ML operations to enhance a wide range of IT operations. The insights derived are far beyond what human analysis could achieve.

As IT infrastructure is becoming progressively complex with the demands of digital transformation, this potential of AIOps is becoming critical to successful IT development. Traditional methods of managing your complex infrastructure could increase your costs, create maintenance issues and increase possibilities of slowdowns. AIOps can equip your IT teams to overcome such problems, trends, and slowdowns. It allows your teams to prioritize and focus on the most important information while AIOps reduces normal alert noises and identifies patterns automatically without human input.

Related Reading: How IT-as-a-Service Boost the Digital Transformation of Enterprises

In fact, AIOps is becoming a necessity for every organization. Gartner predicts that 30% of large enterprises will adopt AIOps by 2023. According to other research, the AIOps platform market is expected to grow to $11.02 billion by 2023. Hence, the question larger organizations should ask themselves is not “if” they need to adopt AIOps, but “when.”

How is AIOps Driving Digital Transformation?

Since digital transformation includes cloud adoptions, quick change and implementation of new technologies, it requires a shift in focus. Instead of users struggling with traditional services and performance management strategies and tools, AIOps offers organizations a perfect model to handle digital transformation. It can help your team to manage the speed, scale, and complexity of changes, which are the key challenges of digital transformation.

Here are some essential steps to effective AIOps:

1. Act Fast

Timing is everything in business and hesitation in the adoption of technologies can set you back more than you can imagine. Even if you feel that you aren’t ready to adopt AIOps yet, read about it and familiarize yourself with the vocabulary and capabilities of AIOps. It will help you make an informed decision when it is the right time.

2. Start Small

All in or all out doesn’t necessarily apply to digital transformation. Starting small could actually prove beneficial to your organization. This would mean that you focus on what is practical and achievable. Your initial use cases could include application performance monitoring, dynamic baselining, predictive event management, and event-driven automation.

3. Restructure Your Team

Successful adoption of AIOps might require restructuring the roles of your team. This would ensure that the best resources are used for the right jobs. Also, identify experience gaps and fill those gaps by providing the necessary training.

Related Reading: Fingent Speaks: What it Takes to Build a Successful Digital Transformation Strategy

4. Leverage Available Resources

Your organization might already have data and analytic resources. Since these teams are already skilled in data management, their skill set can be effectively leveraged for AIOps.

5. Increase Proficiency by Developing Core Capabilities

Developing core capabilities such as machine learning, open data access, and big data can prove beneficial. For example, the massive amount of data generated by digital transformation can be overwhelming. Since the AIOps platform must support responsive ad-hoc data exploration and deep queries, developing this capability can also help you build up progress towards the use of AIOps.

6. Track Business Value

Make sure that the value of AIOps is tied to your overall business objective. The key performance indicators must correlate with best practices and should remain measurable. Ensure that your business is able to obtain a complete and referenceable history of such values.

What Is Your Plan of Action?

AIOps might be taking its first steps, but it is what will eventually drive your digital transformation with unmatched speed and stability. Selecting the right use cases might be challenging initially and might require significant process reengineering. Fingent, a top custom software development company, can help you get there. Call us to find out more.
